Opening the black box

ASU researchers innovate decision-making in design space exploration

Every second of the day presents us with choices, from deciding what to wear in the morning to picking from a menu at dinner. Whether a decision is trivial or life-altering, decision-making is a fundamental element of the human experience.

It’s always easy to question whether a person made the right choice. Sometimes it’s impossible to tell until consequences are revealed later.

In hardware and software architecture, engineers use a technique called design space exploration to evaluate choices during the computer architecture design process and identify the best-performing design among the available options.

Design space exploration technology can choose a preferred option based on desired outcomes like speed, power consumption and accuracy. The technology can be applied to a variety of applications, from object or human recognition software to high-level microelectronics.

Deep learning, a method in artificial intelligence inspired by the human brain, teaches computers to process data. Designs of deep learning accelerators, which are computers that specialize in efficiently running deep learning algorithms for artificial intelligence, rely on design space exploration to choose from their extensive lists of options. Because some of these accelerator designs have billions upon billions of choices to evaluate, existing processes for optimization can take days or even weeks to complete, even when evaluating only a small fraction of the choices.
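To give a sense of how quickly those choices multiply, here is a minimal sketch in Python. The parameter names and option counts are hypothetical, chosen only for illustration, and are not taken from the research. The sketch multiplies the number of options per parameter to get the size of the design space, then estimates how long it would take to evaluate every design.

```python
from math import prod

# Hypothetical accelerator parameters and the number of values each could take.
# These counts are illustrative assumptions, not figures from the research.
option_counts = {
    "processing_elements": 64,
    "l1_buffer_sizes": 32,
    "l2_buffer_sizes": 32,
    "noc_bandwidths": 16,
    "dram_bandwidths": 16,
    "loop_tilings": 10_000,
    "loop_orderings": 720,
}


def design_space_size(counts):
    """Total number of distinct designs: the product of the per-parameter option counts."""
    return prod(counts.values())


def exhaustive_days(total_designs, seconds_per_evaluation):
    """Days needed to evaluate every design once, one design at a time."""
    return total_designs * seconds_per_evaluation / 86_400


if __name__ == "__main__":
    total = design_space_size(option_counts)
    print(f"designs to consider: {total:,}")
    print(f"days at 1 ms per evaluation: {exhaustive_days(total, 0.001):,.0f}")
```

Even with only seven parameters, the count runs past a hundred trillion designs, which is why exploration methods can afford to evaluate only a small fraction of them.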

The process is further complicated by black box explorations, which the design of deep learning accelerators typically relies on. Black box explorations process information without revealing any details about their reasoning.

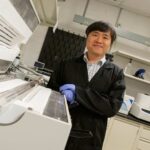

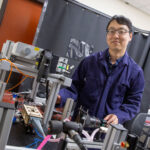

Shail Dave, a computer engineering doctoral candidate in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at Arizona State University, is working to fix this problem with explainable design space exploration. This framework of algorithms and systems will enable researchers and processor designers to understand the reasoning behind deep learning accelerator designs by analyzing and mitigating the bottlenecks slowing down the process.

Delving into decision-making

“Typically, hardware and software designs are explored and optimized through black box mechanisms like evolutionary algorithms or AI-based approaches like reinforcement learning and Bayesian optimization,” Dave says. “These black box mechanisms require excessive amounts of trial runs because of their lack of explainability and reasoning involved in how selecting a design configuration affects the design’s overall quality.”

By streamlining the decision-making process for accelerator design, Dave’s research allows design methods to make choices much faster, taking only minutes compared to the days or weeks existing models can take to process this information. As a result, design optimization models are smaller and more systematic, and they use less energy.

Dave’s research offers an alternative that not only improves search efficiency, but also helps engineers reach optimal outcomes and gain insights into design decisions. By understanding the reasoning behind design choices and related bottlenecks, the method can analyze available design points at every step of the process and separate the good options from the bad. It then makes its decision deliberately, after evaluating the most promising options available.
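The contrast between blind sampling and bottleneck-guided exploration can be sketched with a toy example. This is not the Explainable-DSE algorithm itself. The two-parameter design space, the cost model and all of the names below are assumptions made for illustration. In the sketch, a design is slowed by either computation or memory traffic. A black box search samples designs at random, while the guided search looks at which cost dominates and scales up only the parameter responsible for it.

```python
import random

# Hypothetical design space: 64 processing-element counts and 64 bandwidth options.
PES = list(range(16, 1025, 16))
BWS = list(range(8, 513, 8))

# Hypothetical workload: total operations and total data moved.
WORK = 960_000
DATA = 400_000

# Which parameter relieves which bottleneck (an assumption of this toy model).
KNOB_FOR = {"compute": ("pes", PES), "memory": ("bw", BWS)}


def costs(pes, bw):
    """Toy cost model: time spent computing and time spent moving data."""
    return {"compute": WORK / pes, "memory": DATA / bw}


def latency(pes, bw):
    """The slower of the two costs determines overall latency."""
    return max(costs(pes, bw).values())


def random_search(target, rng, max_trials=100_000):
    """Black box baseline: sample designs blindly until one meets the target."""
    for trial in range(1, max_trials + 1):
        pes, bw = rng.choice(PES), rng.choice(BWS)
        if latency(pes, bw) <= target:
            return (pes, bw), trial
    return None, max_trials


def bottleneck_guided_search(target):
    """Start small; at each step, scale up only the parameter behind the dominant cost."""
    params = {"pes": PES[0], "bw": BWS[0]}
    trials = 0
    while True:
        trials += 1
        name, worst = max(costs(**params).items(), key=lambda kv: kv[1])
        if worst <= target:
            return (params["pes"], params["bw"]), trials
        knob, options = KNOB_FOR[name]
        # How much of this resource would bring the dominant cost down to the target?
        needed = params[knob] * worst / target
        larger = [o for o in options if o >= needed]
        if not larger:
            return None, trials  # the bottleneck cannot be relieved in this space
        params[knob] = larger[0]


if __name__ == "__main__":
    print("guided:", bottleneck_guided_search(1000))
    print("random:", random_search(1000, random.Random(0)))
```

In this toy setup, every step of the guided search comes with a reason, namely which cost was the bottleneck and which parameter was changed to relieve it. The random baseline offers no such explanation for any design it tries.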

Dave notes that his algorithms can also fine-tune design parameters in various use cases. For example, the algorithms can budget battery power for maximum efficiency in devices ranging from smartphones to the powerful processors in supercomputers, while still finishing the application processing in the desired time.

Most notably, Dave’s algorithm can explore design solutions that are relevant to multiple applications, such as those that differ in functionality or processing characteristics, while also addressing the inefficiencies in how each application executes. This is important because many of today’s artificial intelligence-based applications require multi-modal processing, which means one accelerator design may need to be used to complete multiple tasks.

“The great thing about this work is that it formalizes how to leverage a system’s information for making informed decisions and can be applied across a wide variety of uses and different industries where these target systems need improvements and explainability in the process,” Dave says. “We’re analyzing how to get the best performance for any given architecture configuration with different constraints, depending on what the user prioritizes as the most important need.”

Dave’s research has been honored with the second place award in the graduate category of the 2022 Association for Computing Machinery Student Research Competition, complete with a silver medal. Photo credit: Erika Gronek/ASU

Presenting algorithm findings

Dave’s paper on this research, titled “Explainable-DSE: An Agile and Explainable Exploration of Efficient Hardware and Software Codesigns of Deep Learning Accelerators Using Bottleneck Analysis,” has been accepted into the Association for Computing Machinery’s 2024 International Conference on Architectural Support for Programming Languages and Operating Systems, or ASPLOS, a premier academic forum for multidisciplinary computer systems research spanning hardware, software and the interaction between the two fields.

In addition, Dave’s research was awarded second place in the graduate category of the 2022 Association for Computing Machinery Student Research Competition, recognizing his work as being among the most important student research in computer science and engineering in the world.

Aviral Shrivastava, a professor of computer science and engineering in the Fulton Schools and Dave’s mentor and collaborator on the research, says he is proud to see Dave celebrated for his hard work.

“It takes a very motivated student to achieve this kind of success,” Shrivastava says. “There is so much thought, intricacy and detail that goes into research like this. You really need to work with strong researchers, and Shail has been one of them on my team.”

Real-world applications

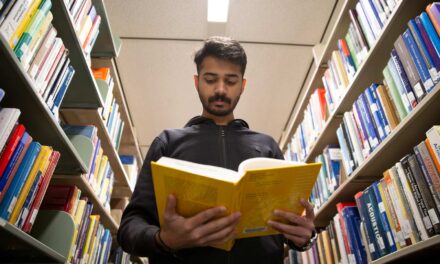

Dave and Shrivastava are also working together to apply this research to the semiconductor industry through the Artificial Intelligence Hardware program of the Semiconductor Research Corporation. The program addresses existing and emerging challenges in information and communication technologies and supports research aimed at transforming the design and manufacturing of future microchips.

Shrivastava notes that one beneficial real-world impact of this research is that it drastically reduces the energy requirements and carbon footprint of design exploration compared to existing models and processes.

“Training neural networks can have the same carbon footprint as a trans-Atlantic flight,” Shrivastava says. “Being able to reduce the overall carbon footprint by making this technology more efficient will have a great global impact.”

Given the research’s significant positive environmental impact and its ability to guide decision-making processes more effectively, Shrivastava sees it having implications across almost all fields and industries.

“By designing these accelerators in a more formal, systematic and automatic way, we are saving people from endlessly searching through space without any guidance,” he says. “This technology offers an introspective, intelligent and insightful way to make sense of all the information available.”