ASU receives three DEPSCoR awards for research critical to national security

Computer science faculty members will create cybersecurity, artificial intelligence and robotics solutions

Three researchers in the Ira A. Fulton Schools of Engineering at Arizona State University have received grant awards under the Defense Established Program to Stimulate Competitive Research, or DEPSCoR, from the U.S. Department of Defense, or DOD. As part of the program, the U.S. DOD awarded $17.6 million to 27 academic teams to pursue engineering research relevant to the nation’s science and technology missions.

ASU was one of only three universities to receive multiple awards. Adil Ahmad, Rakibul Hasan and Nakul Gopalan, faculty members in the School of Computing and Augmented Intelligence, or SCAI, which is part of the Fulton Schools, will each receive up to $600,000 over a three-year period to pursue research in cybersecurity, cyber deception and artificial intelligence, or AI, solutions in robotics.

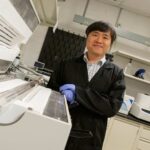

Computers create logs of events that happen on their systems. With the DEPSCoR grant, Adil Ahmad, an assistant professor of computer science and engineering in the Fulton Schools, and his team seek to revolutionize computer logging infrastructure. They will work to leverage logs to enhance cybersecurity. Photographer: Kelly deVos/ASU

Adil Ahmad enters new grant into the log

Logs play a critical role in cybersecurity, one that is essential to attack investigation and the forensic analysis of computers.

And it’s crucial that researchers get the message because it comes from the top.

In 2021, the White House ordered the Cybersecurity and Infrastructure Security Agency, or CISA, to create standards and guidelines for computer logging. The mission has become even more urgent in the wake of increased attacks on the technological infrastructure of federal and state governments and a series of high-profile hacks, including the 2023 leak of more than 600,000 e-mail addresses of employees working at the Department of Justice and the U.S. DOD.

Adil Ahmad, an assistant professor of computer science and engineering in SCAI, has been tasked with revolutionizing computer logging infrastructure.

A log is a record that tracks events that occur on a single computer or a network of machines. A log might contain information about users trying to access a website or download a file from the cloud.

Ahmad considers logs a dual-purpose tool. On the one hand, logs can serve a forensic function, allowing analysts to investigate what happened following a data breach. On the other hand, they can serve a defensive function, helping information technology administrators prevent hacking.

Robust logging practices can stop attacks in progress because, thanks to advances in cybersecurity, breaches now unfold slowly, over a long period of time.

“Under typical attacks, adversaries don’t instantaneously get access to highly secured computer systems,” Ahmad says. “A hacker might send a network packet to infect some very low-privileged software on a computer. Once they do that, they will then work piece-by-piece, slowly trying to compromise other, more sensitive parts of the system.”

He will work with Ruoyu “Fish” Wang, an assistant professor of computer science and engineering, to create a new framework for logging. The researchers plan to make tools that are easier to configure and logs that are easier to analyze, which they believe will be more useful in helping protect systems from attack. Crucially, their work also involves securing the logs themselves against hacks.

Ahmad adds that he thinks their framework will make an important contribution to cybersecurity, saying, “We hope to transform logging into a first-class defense operative against cyberattacks on mission-critical computers.”
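To illustrate what securing the logs themselves can mean, the sketch below shows one widely used general technique, a hash-chained log, in which every entry stores a hash of the entry before it so that later edits become detectable. This is an assumption chosen for illustration only and is not a description of the ASU team's framework; the function names and the record layout are hypothetical.

```python
import hashlib
import json

# Placeholder "previous hash" for the first entry (hypothetical convention).
GENESIS = "0" * 64


def entry_digest(prev_hash, event):
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log, event):
    """Append an event and link it to the current end of the chain."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"event": event, "prev": prev_hash, "hash": entry_digest(prev_hash, event)}
    )


def verify(log):
    """Return True only if every entry still matches its recorded hash and link."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If an intruder later alters an earlier event to hide their tracks, `verify` returns `False` because the stored hash no longer matches the altered content. A chain like this only detects tampering. Someone who can rewrite the entire log could recompute every hash, so practical designs typically also keep a copy of the latest hash somewhere the attacker cannot reach.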

Rakibul Hasan, an assistant professor of computer science and engineering, will study dangerous hackers and create personalized, real-time cyber deception systems to secure government computer infrastructure against attacks.

Rakibul Hasan will use cyber deception for cyber defense

Rakibul Hasan, assistant professor of computer science and engineering, has a plan to keep government and military computer infrastructure safe by learning to think like the people who want to attack it.

“This research is highly relevant to the U.S. DOD’s mission, offering solutions that address current challenges to thwart attackers who employ sophisticated attack strategies that can also change on the fly,” Hasan says. “We’re hoping to help optimize the performance of existing cybersecurity strategies by creating defenses tailored to specific attackers, learning how their personalities might dictate how they launch cyberattacks.”

The study and effective use of cyber deception is becoming more necessary in the wake of significant spikes in cybercrime. For computer scientists, cyber deception is both a detective and defensive technique. They often place decoy assets known as honeypots, such as a fake server or a file containing phony client passwords or Social Security numbers, on networked computers and watch to see who tries to access them. This process enables engineers to stop attacks in progress and spot ways that their systems are vulnerable.
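The decoy idea described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the researchers' system: the `HoneypotMonitor` class, its method names and the example paths are all hypothetical. The underlying logic is that legitimate users have no reason to touch a decoy, so any access to one is treated as suspicious and recorded.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class HoneypotMonitor:
    """Tracks a set of decoy resources and records every attempt to reach one."""

    decoys: set
    alerts: list = field(default_factory=list)

    def record_access(self, source, resource):
        """Return True and store an alert if the requested resource is a decoy."""
        if resource not in self.decoys:
            return False  # ordinary resource, nothing to report
        self.alerts.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "source": source,
                "resource": resource,
            }
        )
        return True
```

In this sketch, a request from any address for a registered decoy, such as a phony password file, produces an alert that identifies who asked for it and when, while requests for ordinary resources pass through silently.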

Hasan will work with Tiffany Bao, an assistant professor of computer science and engineering, to research the personality traits of hackers and create technology for personalized cyber deception tools.

The team will study the attackers’ personality traits — such as fluid intelligence, problem-solving capabilities and decision-making abilities while under pressure as well as ambiguity tolerance — and determine how these traits causally influence attack strategies.

Armed with that information, Hasan will create systems that predict future attack behavior and craft better defensive mechanisms, such as personalized honeypots.

“Our goal is to dynamically create and deploy a deception strategy that has the most potential to prevent an attack in real time based on the attacker’s personality, network characteristics and other contextual factors,” Hasan says.

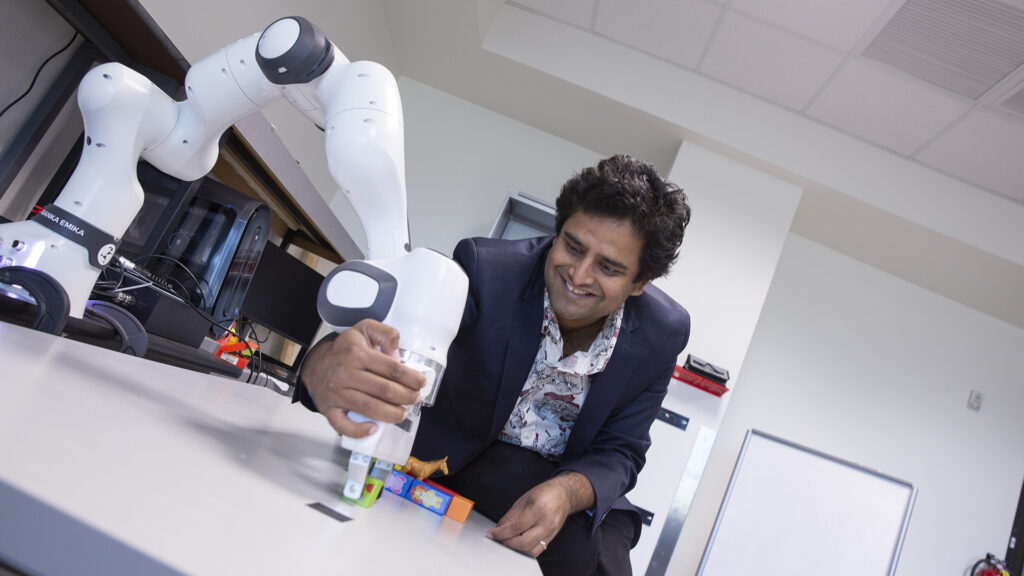

Nakul Gopalan, an assistant professor of computer science and engineering in SCAI, demonstrates the robotic imitation learning technique that his new artificial intelligence work might one day replace. Photographer: Erika Gronek/ASU

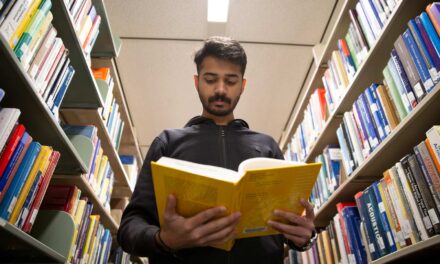

Nakul Gopalan will create AI to train robots to read instruction manuals

Human beings might want to boldly go where no one has gone before, but one of the promises of robotics is that we can send machines where we don’t wish to be: to the cold, empty reaches of outer space; into situations that are dangerous and threatening; and down into the deepest, darkest depths of the ocean.

But when they get there, how will the robots know what to do?

Nakul Gopalan, an assistant professor of computer science and engineering, says that, currently, many robots are trained using what is referred to as imitation or observational learning. A robot could watch a human picking up a cup and then be taught to mimic that gesture.

“But we realized that the DOD might have lots of equipment, like cars and radios, out in the field, perhaps in dangerous areas,” Gopalan says. “It could be desirable to have a robot out operating this machinery but not to endanger a person by sending someone out to do the training.”

Gopalan will work as part of a team with Siddharth Srivastava, an associate professor of computer science and engineering. They will combine different types of AI, especially machine learning and large language models, to train robots to read instruction manuals.

The work has two parts. First, the team must train the robot to understand plain language.

“Human language is very abstract,” Gopalan says. “If you tell a robot to go to the library, how does it know what a library is? Or how fast to go?”

He and his team will create AI-based language models that can be used to break down instruction manuals and train robots to understand plain language concepts. They will then create software that translates the manuals into machine-level instructions. The robot will basically learn to read manuals and then operate the equipment.

Granting a promising future in engineering research

Gopalan sees the grants as a nice validation of the work being done in SCAI.

“It’s kind of special that we have three awards,” he says. “It means we’re hiring the right types of people in AI and cybersecurity and that we have developed real strength in those areas.”

Ross Maciejewski, director of the School of Computing and Augmented Intelligence, confirms that assembling a strong faculty team in critical fields is an important part of the school’s commitment to serving as a pipeline for engineering talent.

“These multiple awards reflect the high quality of the research being done by our faculty,” Maciejewski says. “This, in turn, makes the Fulton Schools an in-demand destination for all kinds of learners and enables us to provide a quality educational experience for our students.”