Innovations in imagery

Experiential computing research is revealing ways to integrate more realistic virtual objects into actual environments

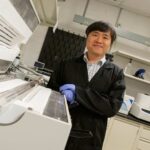

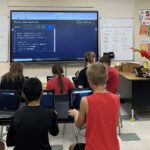

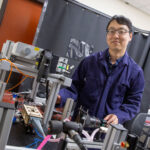

Above: Students involved in research directed by Assistant Professor Robert LiKamWa are designing software and hardware systems and boosting the performance of experiential computing applications in virtual and augmented reality systems. Photo courtesy of METEOR Studio/ASU

Robert LiKamWa sees a future in which the next generations of visualization technologies will enhance our experiences in an ever-increasing number of pursuits.

Applications of virtual and augmented reality technologies already significantly impact education and technical training of all kinds — especially online teaching and learning.

Engineering, science, communications, arts, culture and entertainment media such as movies and video games are all beginning to benefit from advances in tools that generate 2D and 3D images that can be integrated into actual physical settings, says LiKamWa, an assistant professor at Arizona State University.

Robert LiKamWa, an assistant professor of electrical engineering and arts, media and engineering, leads research on embedded optimization for mobile experiential technology at Arizona State University. Presentations on two of his lab team’s projects will be given at MobiSys 2019, the leading international conference on mobile systems science and engineering.

He directs METEOR Studio, short for Mobile Experiential Technology through Embedded Optimization Research. The lab designs software and hardware systems to improve the performance of smartphones, tablet computers and other mobile communication devices. The designs are also applied to experiential computing, meaning virtual and augmented reality systems.

LiKamWa has faculty positions in the School of Electrical, Computer and Energy Engineering, one of the six Ira A. Fulton Schools of Engineering at ASU, and the School of Arts, Media and Engineering, a collaboration of the Fulton Schools and ASU’s Herberger Institute for Design and the Arts.

From those schools he has drawn more than a dozen students to the METEOR Studio lab, where some are at work on projects to develop, fine-tune and expand the capabilities of computer systems to create new and improved forms of integrated sensory immersion.

Examples of the team’s progress will be highlighted at MobiSys 2019, the annual ACM International Conference on Mobile Systems, Applications and Services, June 17 to 20 in Seoul, South Korea. MobiSys is presented by the Association for Computing Machinery, the world’s largest scientific and educational computing society.

Presentations on two projects directed by LiKamWa are on the agenda. That is exceptional recognition of what his group is accomplishing, given that conference leaders have typically accepted fewer than 25% of presentation proposals throughout the event’s 17-year history.

LiKamWa will present “GLEAM: An illumination estimation framework for real-time photorealistic augmented reality on mobile devices,” a project led by graduate student Siddhant Prakash, who earned a master’s degree in computer science from the Fulton Schools in 2018.

Computer engineering doctoral student Alireza Bahremand, recent computer science graduate Paul Nathan and recent digital culture graduates Linda Nguyen and Marco Castillo also worked on the project.

GLEAM is short for Generates Light Estimation for Augmented Reality on Mobile Systems. The project involves an augmented reality application that places virtual objects in physical spaces and illuminates them as they would be lit in those actual environments.

“This is about estimating the light in a space more accurately and robustly,” LiKamWa says. “People have been doing this for a long time in post-production for computer-generated imagery in the film industry but we are bringing this capability to real-time mobile augmented reality systems and doing it in a way that improves the scene’s realism.”

With current systems of this kind, using even low-resolution virtual images requires a large amount of energy, he says, “so we have developed some tactics to actually reduce the energy use but still get better performance from the technology.”

Computer engineering doctoral student Jinhan Hu will present “Banner: An Image Sensor Reconfiguration Framework for Seamless Resolution-based Tradeoffs.”

Recent computer science graduate Saranya Rajagopalan and undergraduate electrical engineering student Alexander Shearer also worked on the project.

Banner involves technology that enables smartphones, headsets, tablet computers and similar devices to adapt camera settings to the needs of computer vision and augmented reality applications, so that images are captured at the resolution appropriate for each specific purpose.

Applications may need high-resolution image streams from the camera to capture distant or detail-intensive features of a scene, LiKamWa says, but they would be able to save energy and boost performance by capturing images at low-resolution settings when possible.

The two images of a small metallic elephant figurine demonstrate a technique using GLEAM to provide high-quality illumination effects in augmented reality scenes. The image on the left shows the actual figurine. The image on the right is an augmented reality image. Work by LiKamWa’s research team is enabling the light reflections in the virtual image on the right to closely approximate the lighting on the actual object on the left. Photo courtesy of METEOR Studio/ASU

However, when applications try to change resolutions of image streams from a camera, mobile operating systems will drop frames, causing interruptions in the imaging.

With the Banner system, images at differing resolutions are captured and rendered seamlessly, with no loss in performance for app developers. At the same time, the system prolongs battery life by enabling low-resolution image streams.

“What we are doing is rearchitecting the parts of the operating systems, the device drivers and the media frameworks, so that we can actually change the resolution without dropping any frames, not even one frame,” LiKamWa says. “So you get a completely smooth resolution without any loss in the user experience.”

LiKamWa and his lab teams have come up with “two very impressive, elegant, technically demanding, well-implemented and well-evaluated solutions to very different problems,” says Rajesh Balan, an associate professor of information systems at Singapore Management University and a program committee co-chair for the MobiSys Conference.

The GLEAM project is a definite step forward in enabling “much more realistic scenes” using augmented reality imaging, while the Banner project “has high value for any application that processes a large number of photo or video images — for example, face recognition applications on mobile phones,” Balan says.

Balan’s fellow MobiSys 2019 program committee co-chair, Nicholas Lane, an associate professor of computer science at the University of Oxford in England, says LiKamWa is working at the forefront of research poised to produce “powerful mobile vision systems with capabilities that were until recently the domain of movies and science fiction. His work stands out because it rethinks how core aspects of these devices must function so they can better cope with the demands of high-fidelity processing and understanding of visual input.”

LiKamWa “has brought great energy to Arts, Media and Engineering,” says the school’s director, Professor Xin Wei Sha. “He is inspiring students and colleagues alike and his METEOR Studio is blossoming with good students and innovative engineering research projects like GLEAM and Banner that are exploring fundamental experiential and technical aspects of mobile technologies and setting the stage for advances five to 10 years into the future.”

LiKamWa’s research, which has earned support from the National Science Foundation and the Samsung Mobile Processing and Innovation Lab, has led to provisional patent applications on the GLEAM and Banner technologies. His team will be releasing software frameworks for application developers to integrate these technologies into their solutions.

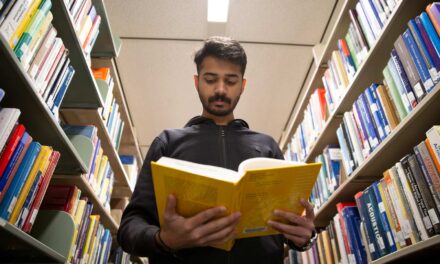

LiKamWa’s METEOR Studio team is developing augmented reality capabilities that enable more realistic integration of virtual images into actual environments. In this photograph, the stovetop is real but the pieces of cookware are virtual objects. Photo courtesy of METEOR Studio/ASU