Will your body be safe from hackers? Engineers consider the next big information security issue

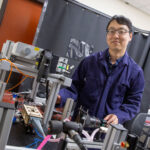

Krishna Venkatasubramanian (standing) and Sandeep Gupta

April 16, 2007

Imagine it’s the year 2020. A heavy-set, 40-something woman sits down for a job interview with a potential employer. As with most people, her health is constantly monitored by tiny sensors dispersed throughout her body. The pill-sized nodes send out data to a larger computer chip woven into her shirt.

Overweight and diabetic, the applicant is worried about how her appearance will influence the interviewer’s perception. She is glad there are laws that ensure a fair opportunity, not allowing the prospective employer to ask about her medical conditions. But unbeknown to her, the interviewer, perched behind a computer, is hacking into her body systems network and gathering her medical data.

This futuristic scenario is actually not far off, says Sandeep Gupta, an associate professor in the School of Computing and Informatics in the Ira A. Fulton School of Engineering.

“We already have biomedical devices such as artificial pacemakers,” says Gupta. “The technology is developing, and I think people will use new technologies that provide a demonstrative benefit to them. That is why people get artificial hips and LASIK eye surgery.”

But Gupta and graduate research assistant Krishna Venkatasubramanian question the security of a “body sensor network.” In December 2006, their research paper, “Security for Pervasive Health Monitoring Sensor Applications,” won the Best Paper Award at the Institute of Electrical and Electronics Engineers’ (IEEE) Fourth International Conference on Intelligent Sensing and Information Processing, held in Bangalore, India. In the paper, Gupta and Venkatasubramanian propose a solution to the vulnerability of information exchange between wireless biomedical sensors.

Biomedical sensors, sometimes called “smart sensors,” are, in essence, computer chips fused with a sensor. They are very small, use little energy and serve primarily as data collectors. Used in body sensor networks, they gather information about such things as heart rate, blood pressure and insulin levels.

These sensors are also communicators, sending data out to a larger computer — such as your doctor’s personal computer — through a “base station,” such as a computer chip woven into your shirt, or into a watch.

Because of the low energy capacity of individual sensors, it is impossible for a sensor in one’s leg, for example, to communicate directly with the base station. This means sensor-to-sensor communication is imperative, and this is where the problem lies.

In Gupta and Venkatasubramanian’s model, sensors form “clusters,” within which there is a self-designated leader that has the responsibility of sending out data to the base station. By forming clusters, the amount of information being sent out is reduced. The effect is the same as when, for example, someone sends out one e-mail with five attachments rather than five separate e-mails. The “leader” node of any particular cluster is one that is close to the other sensors, and it establishes its role by sending out a signal to nearby nodes.

In a case of information theft, someone who wanted the information gathered by your bodily sensors could send out a wireless signal to the sensors in your body — identifying itself as the “leader.” Despite its remote location from the body, the strength of the signal would indicate proximity to all of the sensors, which would then transmit data to the hacker.
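The weakness can be pictured with a small sketch. It assumes, purely for illustration, that each sensor picks as leader whichever announcement it hears most strongly; the `Beacon` class, the node names and the signal values are hypothetical and do not come from the researchers’ paper.

```python
from dataclasses import dataclass


@dataclass
class Beacon:
    """A 'leader' announcement as heard by one sensor (hypothetical model)."""
    sender: str
    signal_strength_dbm: float  # received strength; less negative seems closer


def choose_leader(beacons):
    """Naive rule: trust whichever announcement arrives with the strongest signal."""
    return max(beacons, key=lambda b: b.signal_strength_dbm).sender


# A legitimate low-power node on the body, and an attacker across the room
# who simply transmits with far more power (values are made up).
heard = [
    Beacon(sender="wrist-node", signal_strength_dbm=-60.0),
    Beacon(sender="attacker", signal_strength_dbm=-35.0),
]

leader = choose_leader(heard)
print(leader)  # the attacker is chosen: nothing in the beacon proves who sent it
```

Under this rule, signal strength stands in for proximity, so a loud transmitter is treated as a close neighbor.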

This situation can be prevented if cryptographic “keys” are used for encryption and decryption (jumbling and re-ordering) of data.

“Keys” are long random strings of numbers that, when plugged into an algorithm, can unscramble encrypted data. Before any communication between sensors takes place, the sender and the receiver node must both know the key; otherwise the spoofing scenario described above could take place. This “key distribution” must also be done securely.
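A rough sketch shows why a shared key shuts out an impostor. The article describes keys in terms of encryption. The example below swaps in a closely related use of the same shared secret, message authentication with HMAC from Python’s standard library, because it can be shown without third-party packages. The message format and node names are assumptions made for illustration, not the scheme in the researchers’ paper.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an authentication tag that only a key holder can produce."""
    return hmac.new(key, message, hashlib.sha256).digest()


def accept_beacon(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Accept a leader announcement only if its tag matches our shared key."""
    return hmac.compare_digest(make_tag(key, message), received_tag)


# Key already shared by the legitimate sensors (how it got there is the
# "key distribution" problem discussed in the text).
shared_key = secrets.token_bytes(16)

# A genuine leader tags its announcement with the shared key.
announcement = b"leader:wrist-node"
genuine = accept_beacon(shared_key, announcement, make_tag(shared_key, announcement))

# An outsider does not know the key and has to guess one.
guessed_key = secrets.token_bytes(16)
forgery = b"leader:attacker"
forged = accept_beacon(shared_key, forgery, make_tag(guessed_key, forgery))

print(genuine, forged)
```

The genuine announcement is accepted and the forged one is rejected, however strong the attacker’s signal is. The protection lasts only as long as the key stays secret.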

There are several ways that a key can be distributed to the sensor nodes.

One technique is called “pre-deployment,” in which the key is embedded in the individual sensors at the time they are implanted. This technique saves energy because the key doesn’t need to be communicated, but it also presents a large threat to security. If the key is discovered by a third party, the security of the whole body systems network is immediately compromised.

In another technique, the “leader” sensor decides the key for all nodes in its cluster. The individual sensors would then communicate the key to each other before sharing data, still leaving open the possibility of the key being discovered by an outsider.

There is another, more secure technique, fleshed out by Gupta and Venkatasubramanian in their paper.

“We wanted to find a technique that would exploit the properties of the environment — the body — and derive these keys in an energy-efficient manner,” says Gupta.

In Gupta and Venkatasubramanian’s proposed solution, two sensors wishing to communicate would simultaneously measure the same physiological property, such as the inter-pulse interval (the time between two consecutive heartbeats). This random number would then be put through some sort of algorithm, and then voil