ASU engineer works to develop a portable brain-like computer

Jae-sun Seo

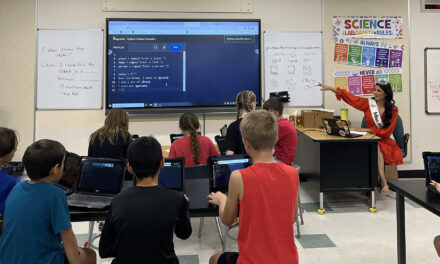

Many of our portable devices today offer advanced voice and image features, but your personal Siri or Google Photos app can’t perform speech or image recognition using only your smartphone’s hardware.

But what if speech and image recognition and other complex cognitive tasks could all be performed on a single portable device, without an internet connection or high-power servers behind the scenes?

Jae-sun Seo is attempting to shatter the computing, energy and size limitations that keep state-of-the-art learning algorithms from running on small-footprint devices, with the help of custom-designed hardware.

This research caught the attention of the National Science Foundation and earned Seo, an assistant professor of electrical engineering in Arizona State University’s Ira A. Fulton Schools of Engineering, a five-year, nearly $473,000 CAREER Award.

“The overarching goal of this project is to build brain-inspired intelligent computing systems using custom hardware designs that are energy-efficient and programmable for various cognitive tasks, including autonomous driving, speech and biomedical applications,” Seo says.

To reduce the resources that learning algorithms require, Seo looks to the human brain, aiming to mimic its ability to selectively and adaptively learn and recognize real-world data while dispensing with the redundant computations and exhaustive searches that current algorithms employ.

Seo also aims to investigate low-power, real-time on-chip learning methods; novel memory compression schemes for software and hardware design; efficient on-chip power management capable of adapting to abrupt changes in cognitive workloads; and cross-layer optimization of circuits, architectures and algorithms.

“The outcomes of this research will feature new very-large-scale integration systems that can learn and perform cognitive tasks in real-time with superior energy efficiency, opening up possibilities for ubiquitous intelligence in small-form-factor devices,” Seo says.

Seo believes the NSF was drawn to his overall research goal: ultra-energy-efficient intelligent hardware that integrates a synergistic set of tasks spanning algorithms, circuits and architectures.

“In addition to covering a wide range of disciplines from bio-inspired models to memory compression to power management, I articulated possible solutions for addressing the design challenges brought by the computation- and memory-intensive nature of state-of-the-art deep neural networks,” Seo says of his proposal.

Collaboration between Fulton Schools faculty and the high-performance computing resources of ASU Research Computing helped make this interdisciplinary research possible, Seo says.