Dirty, dull or dangerous: Using AI to teach robots to do the jobs we don’t want

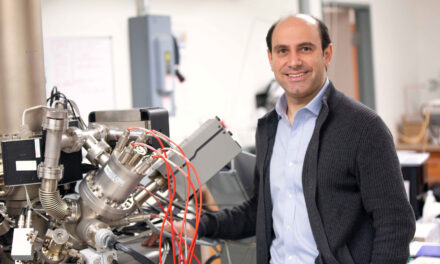

Siddharth Srivastava to supercharge robotics with new toolkit

Fantasy writer Joanna Maciejewska recently took to the social media site X to opine, “I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.” Her statement struck a chord, receiving more than three million views and 100,000 likes.

The writer was expressing a collective frustration with the growing buzz surrounding artificial intelligence, or AI. This new technology is everywhere. Every day, people use tools like Midjourney to generate eye-popping artwork in seconds. Educators fear students are tapping into tools like ChatGPT to write their homework assignments.

But the promise of computer science should be that machines take the jobs we don’t want — giving humankind more time and freedom to dream and imagine.

Siddharth Srivastava is taking that message to heart. He is an associate professor of computer science and engineering in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at Arizona State University, and he also heads the Autonomous Agents and Intelligent Robots Lab, or AAIR Lab.

In the AAIR Lab, a center where robotics and AI research intersect, Srivastava and his team of doctoral students are hard at work, studying how to develop intelligent robots that can complete complex tasks under uncertain conditions.

“We must find ways to get robots to do those jobs that are dirty, dull or dangerous,” Srivastava says. “We want them to do the kinds of jobs people would prefer to avoid.”

Getting there requires new technology, however, because such robots must learn to work on their own.

Funded by multiple grants from the National Science Foundation, Srivastava and his team will create AI solutions in robotics. The resulting toolkit will combine new algorithms, or sets of instructions that robots use to do their work, with language data and real-world models. These algorithms will enable a robot to use its own experiences to invent reusable, high-level actions. The robot can then formulate new plans for solving more complex tasks without further input from a user.

Srivastava explains that robots have been capable of helpful and advanced activities for a long time. He notes that robots are used to help assemble cars in automotive factories and make operations easier and faster at companies like Amazon.

“But those systems require expert-level coding and hand-engineering,” Srivastava says. “Robots must be pre-programmed with exact information about the task that needs to be completed, how it should be completed and the kind of space the robot is operating in.”

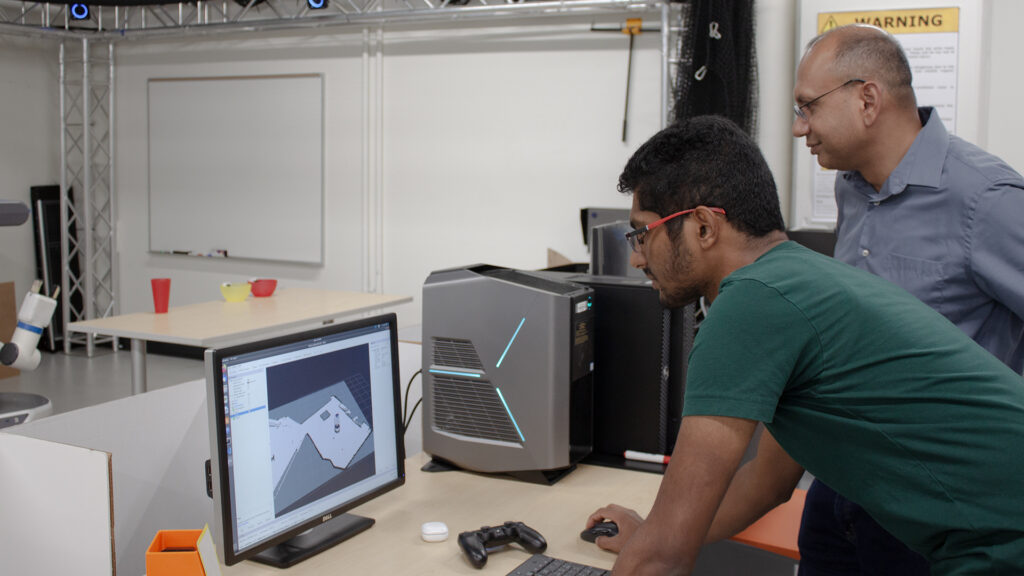

Srivastava (far right) and Nagpal (left) observe as Alfred plans the route it will take to clear dishes off a table, without any advance programming about the working area. Photographer: Kelly deVos/ASU

The need to tell a robot exactly how to weld two car parts together or exactly where a pallet of books can be found in an Amazon warehouse makes developing and deploying these systems difficult and expensive. The current development process keeps robots out of many settings where their use would be desirable.

Srivastava considers the challenge of giving a robot high-level instructions, which are a set of directions in simple, plain language. For instance, what if we need a robot to serve coffee?

“If I ask a robot to bring me coffee, I don’t want it to show up with coffee beans,” Srivastava says. “I don’t want it to run into my family. And I don’t want it to set my house on fire in the process of brewing my drink.”

In the AAIR Lab, the team is testing its work using a Fetch mobile manipulator robot, which they have named Alfred, from the computing hardware and software company Zebra.

Currently, Alfred is learning to clear the dishes after a meal. Alfred was first given a demonstration of how to pick up and put down a cup and a bowl. Srivastava’s algorithms then got to work, allowing Alfred to develop its own logical theory of the world and to work out the reusable actions required to repeatedly move the dishes. The process allows the robot to autonomously create knowledge that would otherwise need to be hand-coded by experts.

With that knowledge in place, Alfred makes its own decisions on what route to take, how to move its arms and where to place the dishes.

“The task of setting a table with hand-coded primitives has been done before,” Srivastava says. “The main technical innovation here is that the robot learns on its own how to generalize.”

This new process will accomplish two things. First, it will allow the robot to operate in any area, without an engineer pre-programming information about the space. Second, it will make the deployment of robots substantially less expensive and less time-consuming, because it will reduce the amount of manual programming work required.

Srivastava and his team see many possible applications of this new technology and are especially looking at uses in the medical sector, including the possibility of robots that can clean hospital rooms. Since the robot could learn on its own to recognize different room configurations and develop a plan to clean the area, the team’s technology has the potential to improve hospital operations.

The researcher also believes that the toolkit could be of great use in disaster-recovery support systems, where it is impossible to know in advance what kind of conditions might be encountered.

Prior to beginning this new work, Srivastava was already contemplating the type of new AI-enhanced technology people like Maciejewska dream of. He was part of a research team developing a robot that could automatically do laundry.

“My family is eager for me to finish this project and get back to the laundry issue,” he jokes.