ASU team taking concept for closer human-robot connection to U.S. Imagine Cup finals

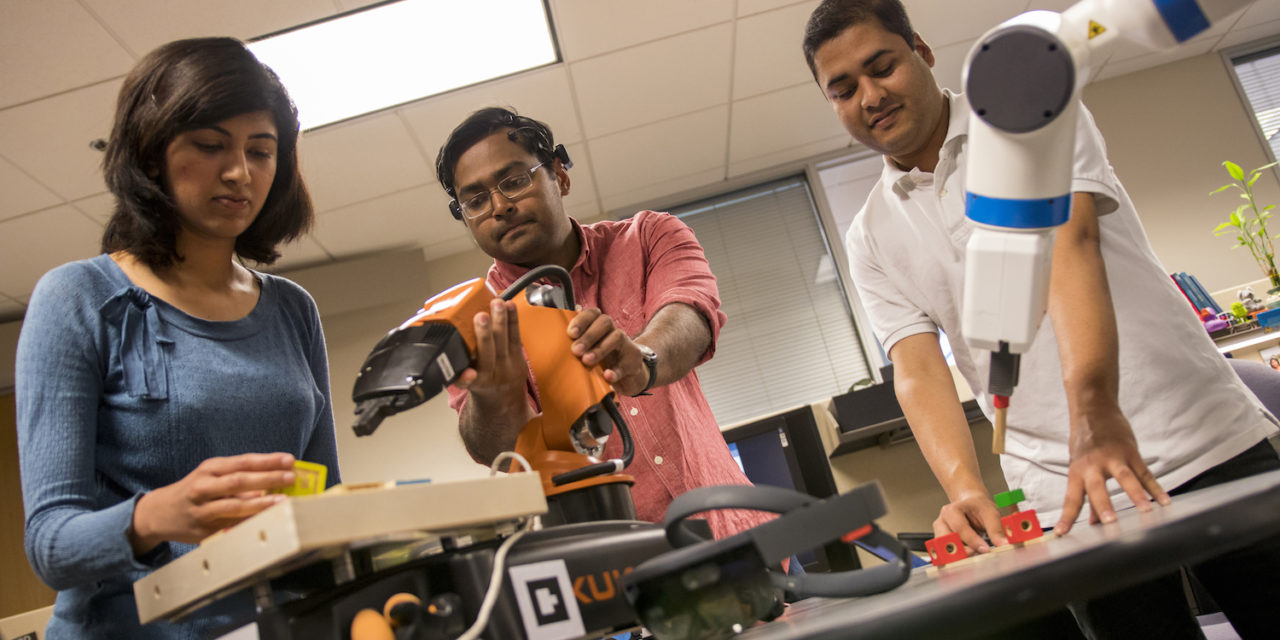

Above: Computer science doctoral students (left to right) Anagha Kulkarni, Sarath Sreedharan and Tathagata Chakraborti have combined aspects of robotics, artificial intelligence, cognitive neuroscience and virtual-reality technology in their project for the Microsoft Imagine Cup competition. Photographer: Marco-Alexis Chaira/ASU.

A project designed to “bridge the communication gap” between humans and robots is among contenders in the U.S. Finals of the 2017 Microsoft Imagine Cup, the international student competition that’s considered the “Olympics of Technology.”

It’s the work of three graduate students pursuing doctoral degrees in computer science at Arizona State University’s Ira A. Fulton Schools of Engineering.

They make up the team named Æffective Robotics, one of 12 to earn a spot in the Imagine Cup U.S. Finals April 18–22 in Seattle. The six top-performing teams will go on to compete in the Imagine Cup Worldwide Finals, also in Seattle, in July.

The first-place team in the Worldwide Finals will be awarded $100,000, plus support to launch a startup based on its project.

For each of the past several years, about 350,000 students from more than 170 countries and regions have entered the competition. This year, about 3,000 students at colleges and universities in the United States were on teams that competed to go to the U.S. Finals.

After several months of preparation on the project they’ve titled “Cloudy with a Chance of Synergy,” the Æffective Robotics members say they are confident about making a strong showing at the national event.

The Æffective Robotics team members with their mentor, Subbarao Kambhampati, a professor of computer science and engineering. Photographer: Marco-Alexis Chaira/ASU.

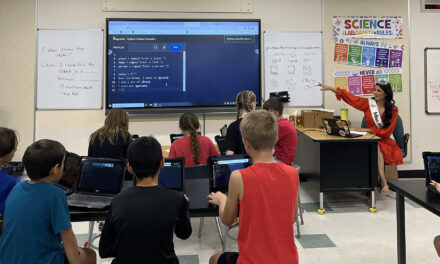

Tathagata Chakraborti, Anagha Kulkarni and Sarath Sreedharan will be presenting a concept — along with software — for the operations of a “factory of the future,” in which robots and humans would be connected through a networking system enabling them to effectively “share a brain.”

The humans and robots would not actually be reading each other’s minds, Chakraborti explains. But they would have technology that provides platforms for a “mutually understood vocabulary” and for “intention recognition and projection,” allowing everyone and everything connected to the network to anticipate each other’s physical movements and high-level goals, and to comprehend the intentions and motivations behind those movements.

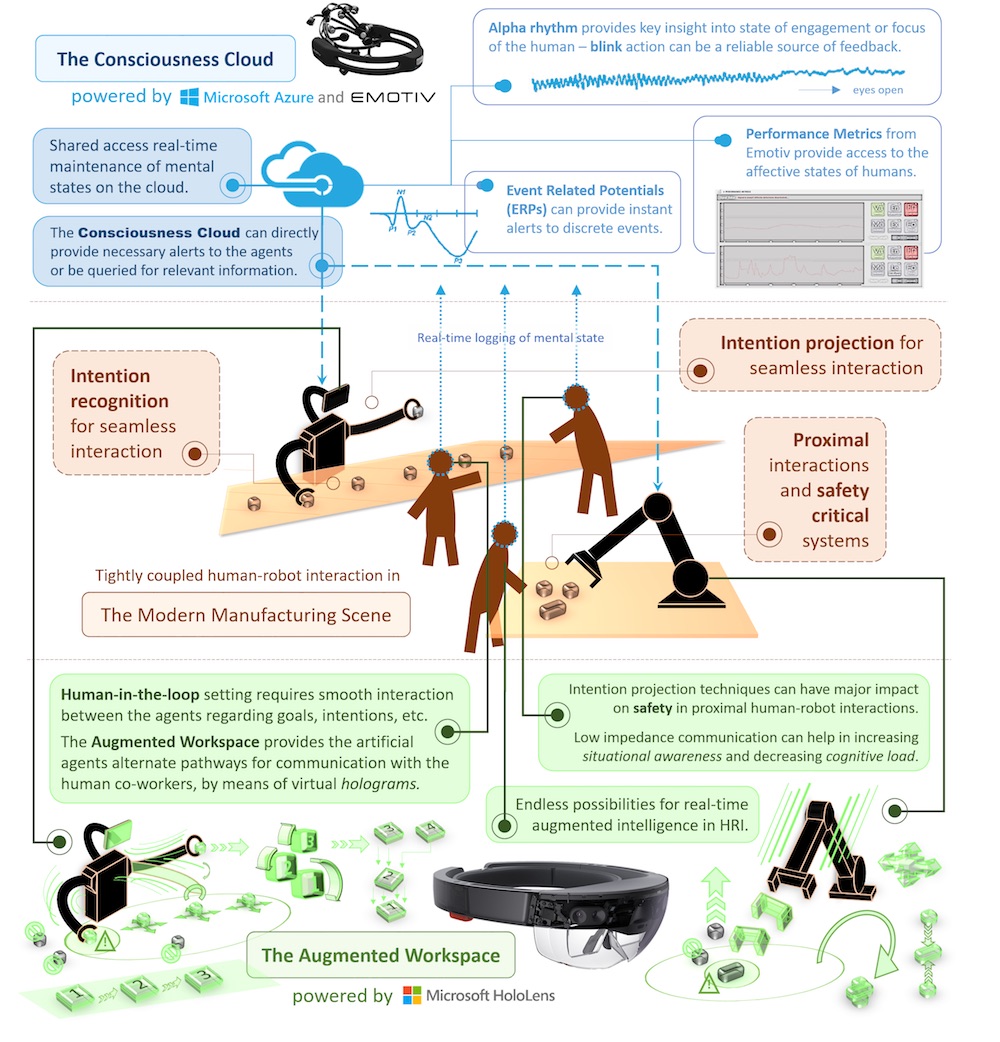

It is all achieved through what team members describe as a Consciousness Cloud that would give the robots working in the factory “real-time shared access to the mental state of all humans in the workplace.”

The humans would wear helmets containing technology linking them into that cloud.

The network system is enhanced by an Augmented Workspace scheme in which humans and robots communicate and interact through an integration of real and virtual environments using holograms (3D images created with photographic projection).

Workers would wear the Microsoft HoloLens, described as mixed-reality “smart glasses,” to enter that virtual-reality space.

The setup would allow people not only to infer the intent of robots’ actions by looking at holographic projections, Chakraborti explains, but also to communicate their own intentions to the factory’s robots.

A robot and a human would be able to understand “what the other intends to do and why it is doing that thing in a particular way,” he says.

The overall concept is embedded in the team’s name.

ÆRobotics is short for Æffective Robotics. “Affective” refers to mental modeling and brain waves. “Effective” refers to the holographic vocabulary for effective communication between robots and humans in the augmented-reality loop.

The illustration shows the schematic for a Consciousness Cloud and an Augmented Workspace system designed to enable humans and robots to communicate and coordinate operations on a manufacturing assembly line.

The team members say that if their idea were turned into reality, it would open up many possibilities for productive human-robot interaction well beyond the factory assembly line and the manufacturing industry.

For the Imagine Cup competition, the team has submitted a video demonstrating how the Æffective Robotics system would work.

The team will demonstrate its project at the Seattle Art Museum on Wednesday, April 19, 2017, as part of a science-fair-style exhibition, followed by a pitch presentation and a project demonstration before Imagine Cup judges on Thursday, April 20, 2017.

The project evolved out of research Chakraborti, Kulkarni and Sreedharan are doing as part of their doctoral studies under the direction of Subbarao Kambhampati, a Fulton Schools professor of computer science and engineering.

As members of his Yochan Research Group (Yochan means “thinking” or “reasoning” in Sanskrit), they are developing human-aware artificial intelligence systems that can support fluid teaming of robots and people.

“For far too long, AI systems have focused on working autonomously without interacting with humans,” Kambhampati says. “This team’s Imagine Cup project is at the forefront of research aimed at augmenting productivity by making machines work seamlessly with humans.”

Kambhampati is the team’s mentor, but the students have also been guided by Yu Zhang and Heni Ben Amor, both Fulton Schools assistant professors of computer science and engineering with broad expertise in human-robot interaction, artificial intelligence and machine learning.

In addition, they’ve been aided by a robot lent to them by Professor Baoxin Li’s computer science and engineering research group and by the cognitive neuroscience expertise of Chris Blais, an assistant research professor of psychology in ASU’s College of Liberal Arts and Sciences and Barrett, the Honors College.

Whatever the outcome of the Imagine Cup competition, the team members agree with Kulkarni’s assessment: “This has been really fun.”