The state of artificial creativity

AI experts in the School of Computing and Augmented Intelligence share their thoughts on content-generating technologies

If you have been on social media in the past month, you may have been tempted to submit your photo to a content-generating website to see yourself transformed into a futuristic cyborg, a pop-art portrait or a mystical woodland creature. Or, you may have explored the depths of your imagination to pull the most obscure themes you could think of to see what image the technology could dream up.

With the help of artificial intelligence, or AI, all of this creativity is possible and accessible at the click of a button.

Content-generating AI technologies have kickstarted a global conversation, with tools like Lensa AI, DALL·E, ChatGPT and GPT-3 dominating headlines. While these platforms are helping users access their inner artists and writers, they are also sparking a heated debate across a range of topics from privacy to academia to national security.

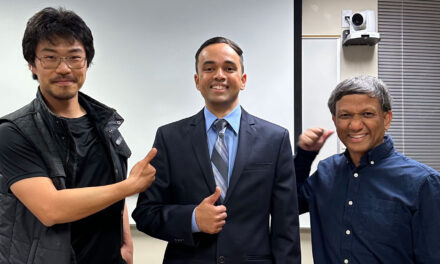

Full Circle spoke to AI experts in the School of Computing and Augmented Intelligence, part of the Ira A. Fulton Schools of Engineering at Arizona State University, to get their perspectives on these technologies.

What is content-generating AI?

Chitta Baral, a professor of computer science and engineering, believes it is important to step back and analyze this technology by “taking the ‘A’ out of ‘AI.’”

“When we just look at intelligence, we’re trying to simulate or mimic human intelligence and achieve the same result in an artifact, robot or program,” Baral says. “The main themes of intelligence are if these tools can learn, reason and understand, which we are constantly striving for in the AI community.”

Lensa AI and DALL·E are examples of tools that generate images. Lensa AI reimagines and edits existing photos submitted by a user, while DALL·E draws on the images it was trained on to generate unique images based on a brief description provided by the user.

GPT-3, the third-generation Generative Pre-trained Transformer, uses deep learning and natural language processing, or NLP, to write human-like text. Text-generating tools have been applied to a broad range of tasks, from recipe writing to producing scientific research papers with Meta’s Galactica tool, which faced immediate backlash and was taken down due to concerns about accuracy and misinformation.

Leaders from ASU’s Enterprise Technology team also weighed in on the advancement of these technologies.

“AI is already being used on campus successfully to support learners with tasks such as financial aid guidance through services like Sunny, ASU’s AI chatbot,” says Allison Hall, senior director of learning experience. “The question now is how these kinds of advancements in generative AI can be leveraged to further assist learners, as well as faculty and staff, to accelerate progress without sacrificing the undeniable need for human-driven critical thinking.”

Donna Kidwell, chief information security and digital trust officer at ASU, notes that generative AI has quickly entered the market after years of work on the part of many researchers.

“ASU has a unique opportunity to help shape the use of AI for our learners, faculty, researchers and the broader community by thoughtfully addressing policy approaches and how we think about ethics and digital trust,” she says. “Transparent and open dialogues will help us harness generative AI to unleash creativity, while ensuring folks can trust what they see and get credit for their contributions.”

Baral says what AI still lacks is context and reasoning, factors that are crucial in elevating these technologies to the next level of content-generating capabilities.

“Most of the time, AI-generated information makes sense, but the human brain can discern when context is missing and is able to tell this content apart from human-generated content,” he says.

Baral notes that holes in communication generated by AI technologies can lead to misunderstanding and miscommunication, resulting in material that can potentially be problematic or insensitive.

Addressing concerns

Subbarao Kambhampati, a professor of computer science and nationally renowned AI thought leader, believes the risk that image-generating tools pose when they are in the wrong hands should be alarming to the general public.

He notes that while Lensa AI, DALL·E, GPT-3 and, more recently, ChatGPT are receiving national attention, they are among dozens of similar tools, some of which are not supervised. Many of these platforms, like Stable Diffusion, take an open-source approach, allowing users to run the systems on their personal machines.

“While DALL·E has a filter to remove any violent, sexist or racist prompts, many other AI tools do not have regulations in place,” Kambhampati says. “There is no limit to the upsetting, potentially dangerous or explicit content that can be created. Once Pandora’s box is open, it can be very challenging to get the lid back on.”

Beyond producing misleading or offensive photos, Kambhampati says the lack of regulation around these images also has the potential to create political tension and even national security risks. He says some of the images appear so realistic that it is nearly impossible to tell them apart from authentic photos. The same has been seen in deepfakes, which use a kind of AI called deep learning to alter videos and fabricate events.

For example, Kambhampati explains that AI tools could generate images of political leaders appearing to share confidential documents, shaking hands with controversial business executives or having seemingly cheerful conversations with disputed politicians. This material could have the power to impact elections or alter relationships between sparring countries.

On the creative end of the spectrum, AI-generated artwork is winning national competitions and artists’ work is being used without their knowledge to train AI algorithms, causing an uproar among working artists.

Within the creative community, AI advancements are raising concerns that the roles of artists and writers could become obsolete. YooJung Choi, an assistant professor of computer science, believes there will always be room for humans in the creative world. She compares the phenomenon to the creation of the camera, which she notes did not destroy the art of painting but pushed it to evolve from hyper-realism to modern art, a form cameras were not able to replicate. A similar evolution may lie ahead for artists and writers, and it remains to be seen what they will bring to the table that AI cannot.

Future advancement

As AI technologies continue to improve, countries are taking notice and passing legislation to establish more consistent regulations.

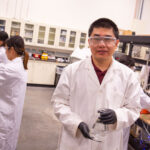

In the meantime, researchers like Baoxin Li, a professor of computer science, are developing solutions to verify AI-generated content.

“Most fake media out there is fairly easy to detect, but the issue is that the technology is getting better and better,” he says. “When countries are investing funding and manpower in producing this content for terrorism-like activity and the average person can’t tell the difference, it becomes a very serious issue.”

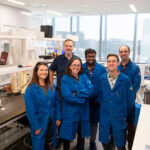

Li and his visual representation and processing student research group are exploring the defense angle of image verification, building technologies to define and detect AI-generated content.

Li and his team are also researching how to make machine learning and AI systems more robust. By improving these systems’ ability to identify fake images on their own, the researchers can embed an added layer of defense in case attacks go undetected.

“An example of this could be someone using a deepfake or AI-generated image to try to gain access through facial recognition software,” he says. “With teams to actively defend against adversarial attacks and mechanisms in place to make systems less vulnerable, attacks are harder to accomplish.”

Although there are important concerns to address as AI technologies continue to advance, AI experts in the School of Computing and Augmented Intelligence also see great potential.

“If used in the right way, AI can help bridge the gap between anything humans can imagine in our wildest dreams and the ability to make it a reality,” Kambhampati says.