Fulton Schools faculty win award with innovative “person-centered” multimedia paradigm

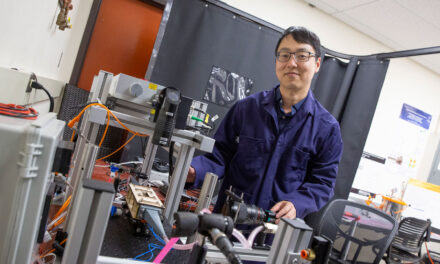

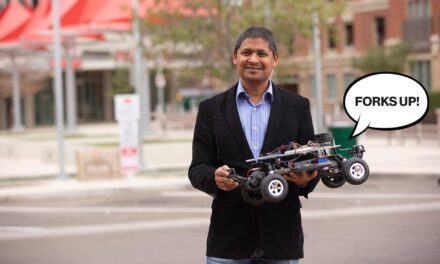

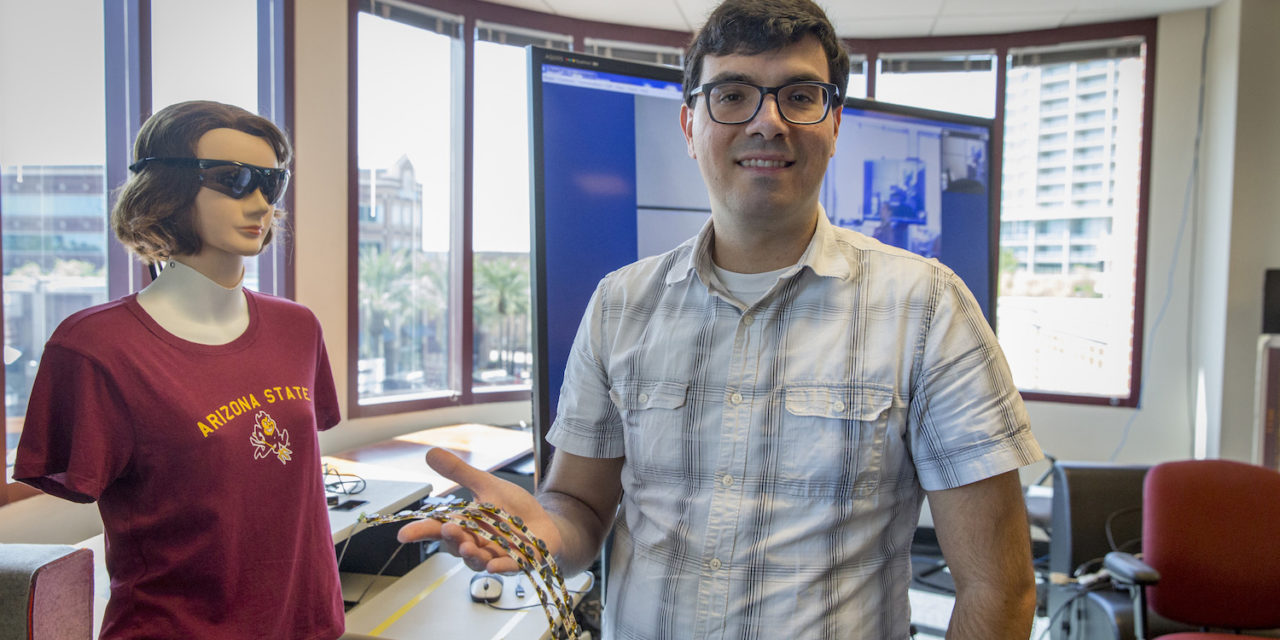

Above: Troy McDaniel shows off the haptic devices that can be placed on a chair to deliver vibration patterns. Photographer: Jessica Hochreiter/ASU

When you update your phone's software, it takes some effort to adjust to changes in an interface you had grown used to. You have to learn how the new version works, but why shouldn't the technology adapt to you as well?

A team of researchers in Arizona State University’s Ira A. Fulton Schools of Engineering set out to make this a reality by creating adaptive assistive computing technology.

This work caught the attention of the Institute of Electrical and Electronics Engineers (IEEE), which presented three ASU faculty members and one ASU postdoctoral researcher with the 2017 IEEE MultiMedia Best Department Article Award on October 26, 2017, at the 25th ACM Multimedia conference, held at the Computer History Museum in Mountain View, California. The award-winning article, titled “Person-centered multimedia computing: a new paradigm inspired by assistive and rehabilitative applications,” was written by Sethuraman Panchanathan, executive vice president of ASU Knowledge Enterprise Development; Shayok Chakraborty, an assistant professor at Florida State University (formerly an assistant research professor at ASU); Troy McDaniel, an assistant research professor; and Ramin Tadayon, a postdoctoral research associate.

The paper introduced two applications of a new paradigm called “person-centered multimedia computing,” developed by Panchanathan, who directs the Center for Cognitive Ubiquitous Computing, also known as CUbiC.

“It’s a paradigm that proposes ideal technological designs as individualized designs: technology that adapts to its users over time through continual use,” McDaniel said.

McDaniel, alongside Panchanathan, Chakraborty and Tadayon, focused on applying the new model to two areas: social assistive aids for individuals who are blind and rehabilitative technologies for individuals who have suffered strokes.

“Designing for individuals with disabilities necessitates individualized designs because no two disabilities are the same,” McDaniel said. “The proposed paradigm stems from this need for person-centered designs.”

CUbiC’s core research in multimedia computing, machine learning, computer vision and haptics is driven by application areas of assistive, rehabilitative and healthcare technologies for individuals with disabilities.

“The challenge with individualized designs is that we could potentially spend a lot of time and energy building and fine-tuning a technology for a specific user, but what if someone else wants to use it?” McDaniel asked. “How do we find the balance to ensure solutions can generalize to other users?”

The researchers addressed this concern by exploring coadaptive algorithms, which allow both the person and the machine to adapt to each other over time.

“That’s what person-centeredness is all about — the technology adapting to you so that it’s perfectly suited for you,” McDaniel said.

One application of the new paradigm is the Social Interaction Assistant, an assistive device that gives individuals who are blind access to nonverbal social cues, such as facial expressions. The system pairs a body-worn or tabletop webcam, whose video is analyzed by machine learning and computer vision algorithms to recognize the nonverbal cues of interaction partners, with haptic, touch-based devices that relay those cues discreetly and privately to the user.

“The presentation part is difficult: you don’t want to obstruct a user’s hearing because then they can’t hear their partner speak,” McDaniel said. “So, we try to convey as much information as possible through the sense of touch. We can do that using, for example, vibrations. By exploring vibration patterns that vary both spatially and temporally, and haptic devices capable of delivering these cues, we have found a set of patterns that intuitively convey something as complex as facial expressions and emotions.”

Coadaptive algorithms can also improve the accuracy of the machine learning and computer vision algorithms used in this technology by taking into account context and the user's behavior.

About a year after its publication in August 2016, the article won the Best Department Article Award, reassuring the researchers that they were on the right track.

“My impression is that people are recognizing the work and identifying something that’s an important and useful contribution,” McDaniel said.

McDaniel’s team is now applying person-centered multimedia computing to other applications.

“As we investigate more projects here in CUbiC, we’re developing them under this paradigm because it’s such a powerful approach,” McDaniel said. “It has inspired not only our current work but much of our planned future work as well, so we’re continuing to explore different applications that we can apply this paradigm to in a useful way.”