ASU student develops sensing technology for visually, hearing impaired

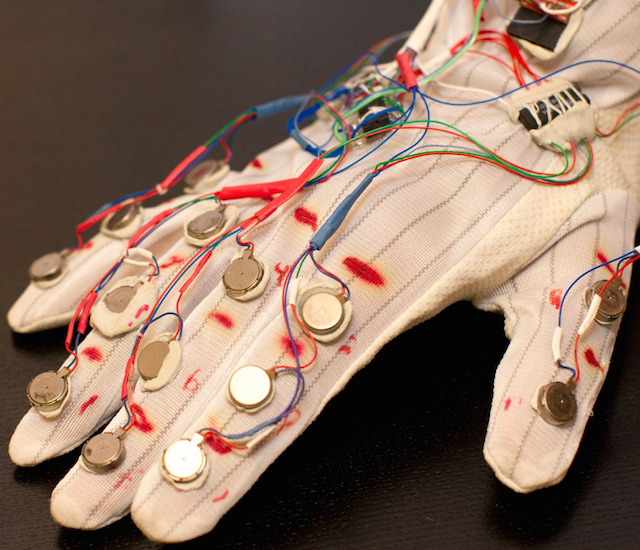

A sensing glove was the first device ASU graduate Shantanu Bala worked on at ASU’s Center for Cognitive Ubiquitous Computing (CUbiC). A camera picks up a person’s facial expression and the glove provides different sensations based on what the camera sees. Photo by: Center for Cognitive Ubiquitous Computing

A visually impaired man can’t see the interviewer sitting across from him but knows that the questioner has a smile on his face.

Motors in the visually impaired person’s chair give the sensation of having a shape – a smile – drawn on his back.

“We use that as a way to communicate the face,” said Shantanu Bala, an Arizona State University graduate.

Vision through chair massage.

Bala, who developed the new technology while at ASU, is helping people with visual and hearing disabilities to know what they can’t sense.

Here’s how it works: the visually impaired person sits in a chair with different motors on it. A camera captures the facial movements of the interviewer, and that information is translated into sensations on the person’s back, allowing them to feel the movement of somebody’s face.

“It’s for the most part hidden,” Bala said. “You might be able to hear a small buzz of different motors. One of the goals we had was to make it as discreet as possible.”
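The translation step described above, from camera to motors, can be illustrated with a small sketch. It is not the center's software: the grid size, the coordinate convention and the function names are all assumptions made for illustration. It takes a traced facial curve, such as the outline of a smile, and converts it into the order in which motors on a chair back might fire.

```python
from typing import List, Tuple

# Assumption: a traced facial feature arrives as normalized (x, y) points,
# each in [0, 1], with y increasing downward (as in image coordinates).
Point = Tuple[float, float]
# Assumption: motors sit in a rectangular grid, addressed as (row, col).
Motor = Tuple[int, int]

ROWS, COLS = 4, 6  # hypothetical grid size, not taken from the article


def point_to_motor(p: Point, rows: int = ROWS, cols: int = COLS) -> Motor:
    """Map one normalized point to the nearest grid cell, clamping to the grid."""
    x = min(max(p[0], 0.0), 1.0)
    y = min(max(p[1], 0.0), 1.0)
    col = min(int(x * cols), cols - 1)
    row = min(int(y * rows), rows - 1)
    return (row, col)


def trace_to_motor_sequence(trace: List[Point],
                            rows: int = ROWS,
                            cols: int = COLS) -> List[Motor]:
    """Turn a traced curve into an ordered list of motors to pulse.

    Consecutive points that land on the same motor are merged so each
    motor fires once per pass along the curve.
    """
    sequence: List[Motor] = []
    for p in trace:
        motor = point_to_motor(p, rows, cols)
        if not sequence or sequence[-1] != motor:
            sequence.append(motor)
    return sequence


# A made-up smile: the mouth corners sit higher than the middle of the mouth.
smile = [(0.1, 0.4), (0.3, 0.6), (0.5, 0.7), (0.7, 0.6), (0.9, 0.4)]

if __name__ == "__main__":
    print(trace_to_motor_sequence(smile))
```

Pulsing the motors in that order would sweep across the back from one corner of the mouth to the other, which is one plausible way to "draw" the shape on the skin.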

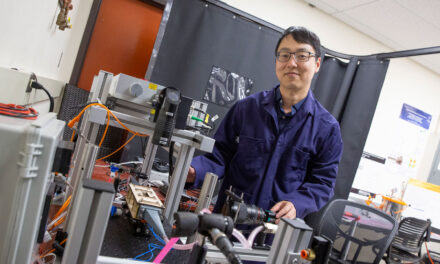

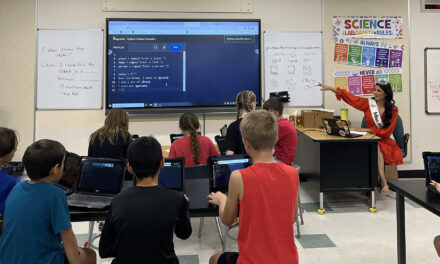

Bala, who double majored in psychology and computer science, has worked with ASU’s Center for Cognitive Ubiquitous Computing (CUbiC) for six years on projects that help provide people with hearing or visual disabilities with situational context.

The first device he worked on involved a glove that would provide different sensations on a person’s hand depending on what the computer camera picked up from the speaker.

“If another person is smiling, you actually kind of feel a smile on the back of your hand,” Bala said, drawing a smile on his hand. “That’s a way to take emotions and turn them into something a person with a visual disability can interpret as they’re talking to someone.”

Working on these devices helped him win the Thiel Fellowship, a program that provides funding, resources and mentors to help its fellows carry out their projects. Bala said that during his two-year fellowship he will work on turning these research prototypes into patentable devices or products that can be sold in stores.

Over the last six years, the center has been working on different prototypes of social interaction assistants. People with a visual disability are able to hear a conversation, but they miss nonverbal details such as the other person’s facial expressions and body language, Bala said.

Troy McDaniel, associate director at the center, has worked with Bala since he came to the center as a high school student. He said Bala has grown professionally during the past few years.

“When I interact with him, I have to remind myself he’s an undergraduate because he’s working at a higher level,” McDaniel said.

Bala has been part of the research on using the sense of touch to communicate information for five years, McDaniel said. At the end of his sophomore year in high school, Bala decided to approach the center to see if there were any opportunities for him.

“I went to them and said, ‘Hey I have some free time. I want to volunteer here,’” Bala said.

He implemented the software that captured the information for the glove device. Bala said they use a computer camera to catch the movements of a person talking, but they plan on using a smartphone in the future.

He has also helped build a chair that provides a similar sensation, but on the person’s back. Bala designed the mapping of the haptic representations, implemented the software and conducted a user study, McDaniel said. Because the back offers more space than the hand, the user is able to sense drawings that convey the entire movement of a person’s face, from nose to eyebrows.

While the device has been used to convey emotions in a conversation, Bala said this technology could be used in a multitude of situations.

“You could take any image or anything that’s visual and turn it into something someone with a visual disability can feel on their skin,” Bala said.

He is currently working on the same concept with Austin Butts, a biomedical engineering graduate student. Butts’ thesis applies the concept to hearing disabilities to help hearing-impaired people gain situational awareness. Bala is trying to see if they can take sounds picked up from a microphone and turn them into something a person could feel on his or her skin.

“The dynamic we have working together is pretty good because we both have different areas of expertise,” Butts said.

During his two-year Thiel Fellowship, Bala also wants to explore how relaying information this way can be used in other areas of life.

“There’s a lot of technology that’s there that’s still kind of unexplored, so we don’t know how to really use it, and that’s the main thing I want to try and figure out,” Bala said. “Is there a use for it outside of this small context?”

As part of the Thiel Fellowship, he’ll commute to the San Francisco Bay Area but will complete the majority of his work at ASU.

Written by Alicia Canales