When engineering gets eerie

Progress in science and engineering isn’t without its pitfalls. Labs can feel like they’re working against their inhabitants, student projects take odd twists and turns, career paths are anything but smooth and the route to success is perilous.

Robotics is a risky business when a fire breaks out in the lab — but the flames aren’t the scariest part.

On a warm Sunday evening in October, a small electrical fire ignited in a computer monitor at Arizona State University’s Brickyard building. But the flames weren’t nearly as terrifying as the building’s fire sprinkler system.

Several computer science and engineering students were working in the building when the shrieking fire alarm sounded and prompted an evacuation. Heat from the fire triggered the sprinkler system, which extinguished and contained the flames.

Once at a safe distance, the students feared for the robots still inside and immediately notified Yezhou Yang, an assistant professor of computer science and engineering.

“There are a bunch of intelligent robots from my lab in the building along with other robots from my colleagues’ labs,” says Yang. “I worried our robots were subjected to water damage from the fire sprinkler system.”

Yang’s 25-minute drive to campus was filled with thoughts of the robots short-circuiting, which could cause catastrophic damage.

“I was terrified because of how much time we spent working on these robots,” says Yang. “Thousands and thousands of hours from students and faculty went into building them.”

When Yang arrived, the flooding was significant on the second and third floors of the building, but all the robots were still intact.

“It was a spooky experience for a robotics engineer,” says Yang.

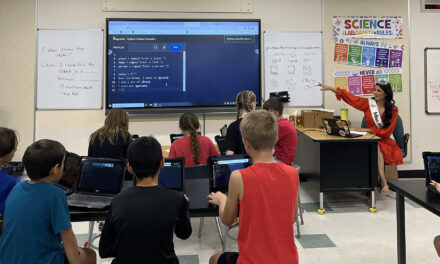

An industrial engineer bites off more than she can chew on a daunting class project.

Industrial engineering senior Michaela Starkey wasn’t prepared for the horrors of racing the clock with the fate of her grade in a hospital’s hands.

Last year, as a junior embarking on her first real-world project for the Work Analysis and Design course, Starkey already had a daunting task ahead of her.

“We were supposed to team up in groups of three and go find our own project for the semester,” Starkey said. “It’s no easy feat convincing a company to let you come into their establishment and tell them what they need to fix and how to fix it.”

But Starkey and her team decided it was time to go big or go home, and big ambitions would mean a big opportunity to stand out on their résumés. They aimed to help a major hospital in Arizona.

Time is an important factor on engineering class projects, with deliverables due throughout the semester. It took a couple of months just to get their project accepted by the hospital, and it only got slower from there.

First, there was the lengthy onboarding process. Then they had to hunt down their vaccination records and get new vaccinations to be able to work closely with patients. By the time they could finally start, two main deliverable deadlines had already passed.

However, they weren’t facing the horrors of such an ambitious project alone. They had the support of their professor, who was excited by the prospect of the first industrial engineering student group working with the executive board of a major company.

“We powered through the hurdles, kept our professor in the loop, asked permission before we did almost anything that involved missing deadlines and generally worked very hard,” Starkey said.

She warns students not to bite off more than they can chew, but says that communicating with a professor can help bring a grade back from the dead.

A graduate student in biomedical engineering finds himself in the wrong place at the right time.

One night, Scott Boege was practicing a presentation for a job interview that would take place the next day. He set alarms to ensure he’d have time to arrive early and make a good impression. He confirmed the interview location with a colleague who was also interviewing with the company. He arrived early. It was all going according to plan.

But when he got there, the biomedical engineering graduate student walked around looking for the conference room and couldn’t find it. He couldn’t even find a map or any people working there.

Boege checked with his colleague again, who paused, then delivered the horrific news: He was actually in the wrong building and needed to be on the other side of town in 10 minutes!

“I hopped into my car and drove as fast as I (legally) could to the interview,” Boege said. “I arrived right on time for the interview. I was shook.”

Interviewing for a job can be terrifying enough, but it’s even scarier when you’ve just been running for your career.

“My heart was racing and I didn’t interview to the best of my ability, which has a bigger impact on your interview than minor details in a presentation,” Boege said. “I ended up getting hired though, so that was a relief.”

While it’s important to practice for an interview presentation, it’s also important to confirm your interview details so you’re not in the wrong place at the right time!

Psychology is the science behind what makes horror stories scary, but in this story, adapted from a book by human systems engineering professor Nancy Cooke and her colleague, psychology and cognitive science help prevent the human horrors of technological failure.

Thump, thump, thump. On December 28, 1978, United flight 173 from JFK airport to Portland was going well until the landing gear lowered with an odd sound and a yaw to the right.

The captain and first officer had about an hour of flying time left in the tank to figure out the problem as they approached Portland. They got to work discussing the problem between themselves and with Portland airport personnel and United Airlines maintenance.

At the same time, on the ground, young professor Bob Helmreich was writing a paper about the psychology of small groups, such as crews performing under the crisis of a landing gear failure.

A crisis was building on United flight 173 as the crew came no closer to solving the landing gear problem.

The plane lost an engine, then another. They were out of fuel.

Six miles east-southeast of the airport, they crashed.

The National Transportation Safety Board determined that the crash, caused by empty fuel tanks, stemmed from ineffective communication among the crew about their dwindling fuel and the urgency of the situation.

Helmreich’s crew psychology paper and the United flight 173 crash would become the catalysts for what is now called crew resource management, or CRM, a practice solidified by human factors researchers who observed crew interactions in flight and their outcomes.

“Errors made by the crew become threats they have to manage, just like they have to manage problems not of their doing such as weather and mechanical breakdowns,” writes Nancy Cooke, a human systems engineering professor in the Fulton Schools, with her colleague Frank Durso, a psychology professor at the Georgia Institute of Technology.

Learning how flight crews can manage failures and mistakes through effective group communication is the basis for CRM.

“Cognitive engineers have also developed markers that allow trained observers to distinguish a functioning teammate from a dysfunctional one, the skilled from the unskilled, the good from the bad,” Cooke and Durso write. “CRM is a prime example of how a demonstrable success can arise from good ideas taken from one domain and applied to another.”

Cooke studies individual and team cognition. Ongoing projects in her research group include human-robot teaming and coordination of human-autonomy teams in the face of unexpected events.

In Stories of Modern Technology Failures and Cognitive Engineering Successes, Cooke and Durso explore real examples of technological failures and how the fields of cognitive science and human systems engineering can prevent such horrors from happening. In one case, applied cognitive science and engineering created a solution to prevent nightmare fuel scenarios from happening in the skies.

Read about more ways cognitive engineering helps identify problems and implement successful solutions in Stories of Modern Technology Failures and Cognitive Engineering Successes.