Robotic autonomous cars teach whole system design

Computing doesn’t happen in isolation. Today’s computing systems, like those in cars and appliances, increasingly intertwine software, electronics and mechanical components. As computing becomes more integrated into everyday objects, engineering students need a hands-on, comprehensive approach to understand how these parts interact.

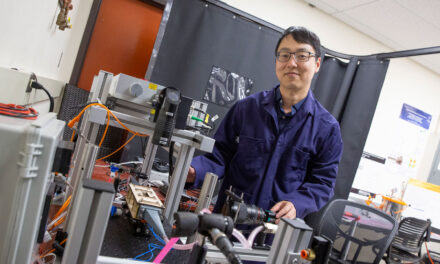

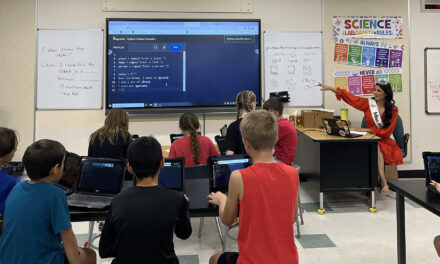

Computer science and engineering Associate Professor Aviral Shrivastava identified autonomously driving vehicles — robot cars — as a way to teach the complexity of today’s computing systems to his students.

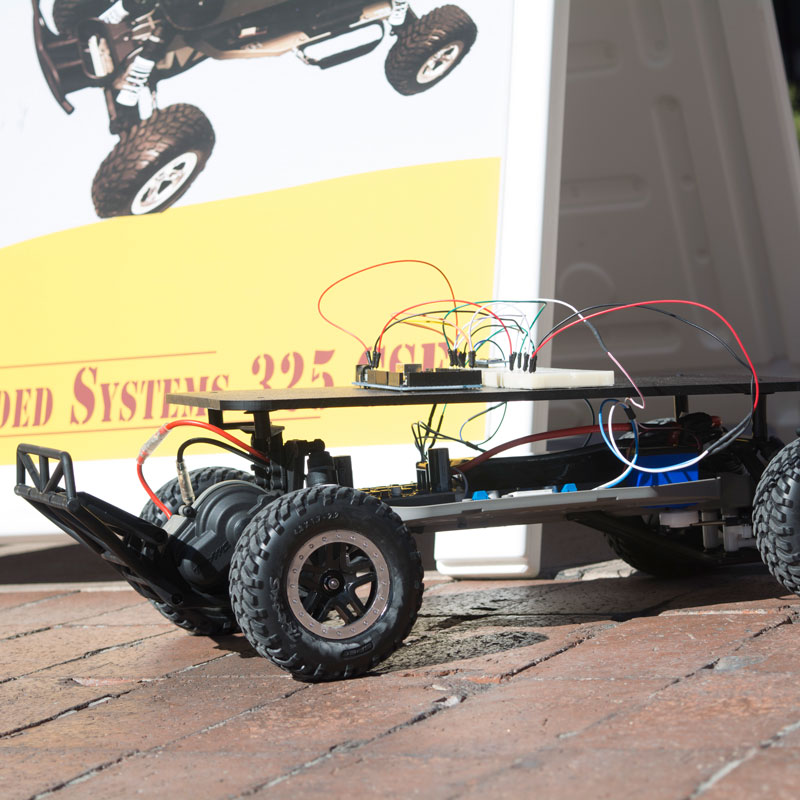

Students in the computer systems engineering class Embedded Microprocessor Systems test their self-navigating cars in the first of four demonstrations. Associate Professor Aviral Shrivastava redesigned the class this semester to teach the complexity of modern embedded systems through robotic model cars. Photographer: Mihir Bhatt/ASU

Keeping up with the computing times

Last fall, Shrivastava noticed that the required embedded systems course he would teach this spring, Embedded Microprocessor Systems (CSE 325), didn’t prepare students to work with modern embedded systems — computing systems incorporated into electronic devices beyond personal computers. The purely computing systems of old no longer cut it. More complex cyber-physical systems are the new normal.

“Cyber-physical systems are systems that have both a computing aspect and a physical or real-world aspect,” Shrivastava says. “The key difference from embedded systems comes from the fact that when designing embedded systems you are concerned only about the computing aspect of the problem, but in cyber-physical systems you need to understand the physical aspects to be able to design the system correctly.”

A computer systems engineering student gets ready to test whether his team’s car can drive a figure 8, given the diameters of the two circles, on April 1 in the Brickyard courtyard. Photographer: Mihir Bhatt/ASU

Robotic cars teach system-level design

Autonomous cars are one high-profile example of a cyber-physical system. They involve many of the embedded system concepts that computer systems engineering students need to learn, and they represent an exciting and growing industry. Scaled down to a semester-length project, the idea gives students in Shrivastava’s class hands-on experience with a complex system: a robotic toy car they learn to build from scratch.

Computer engineering graduate student Edward Andert, who is a graduate teaching assistant for the Embedded Microprocessor Systems class, built a small robotic autonomous car for his undergraduate capstone project in 2013. That became the basis for the project this semester’s 50 computer systems engineering students are building in class.

It’s an involved assignment that has kept the bar high in a traditionally difficult computer systems engineering class. Students get the car chassis, batteries, wires, Arduino Mega microcontroller board, drive and steering motors, and sensors — inertial measurement unit (IMU), global positioning system (GPS) and Light Detection and Ranging (LIDAR) unit — learn how the components work and interact with each other, and put them together and program them in stages to add increasingly complex capabilities.

Photographer: Mihir Bhatt/ASU

When Andert took the class in 2012, he created an 8-by-8 LED panel that lit up and let the user play simple games through integration with a Nintendo Wii Remote. He says the updated Embedded Microprocessor Systems course focuses less on reprogramming a difficult-to-use, decade-old processor and learning protocols hardly used today, and more on the types of processors, protocols, sensors and other system parts used in smartphones, autonomous vehicles and other applications students are likely to see in their future careers.

Shrivastava designed the new version of the course to holistically teach three critical aspects of designing a cyber-physical system: the parts of the system; processing and communication; and feedback control.

First, students learn how batteries, sensors and motors work within a system. Next, students learn about processors, protocols and programming, as in the original course, which focused solely on computing. And last, students learn the systematic and continuous approach to getting a system to behave how they want.

Putting design skills to the test

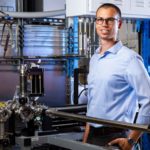

Teaching assistant Mohammadreza Mehrabian picks up a car to see whether it corrects its wheel positioning when taken off course. Photographer: Mihir Bhatt/ASU

On April 1, students demonstrated the fifth of seven course projects, in which they used feedback control to drive the car in a figure 8 made of circles with two different diameters. Students had to continuously read the IMU sensor to determine whether the car was deviating from its course and continuously correct it so that it stayed on the correct path. The car had to work correctly even if it was momentarily taken off course. To test this, teaching assistant Mohammadreza Mehrabian picked up the car; while he held it in the air, it was expected to try to right its course by turning the wheels in the correct direction.

Most of the students completed the project successfully and learned firsthand a valuable lesson in feedback loops.

“Once they started trying, they quickly realized that it is difficult to make the car drive in figure 8 without a feedback loop,” Shrivastava says. “They applied feedback control and tuned its parameters to be able to control the car. They saw how effective and useful feedback control is to make the system perform the way they want to.”
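For readers curious what such a feedback loop might look like, here is a minimal sketch. It is not the students’ code, which the article does not show and which would run on the Arduino rather than in Python. It assumes a simple proportional controller on heading and a basic bicycle model of the car. Every name and number in it (`steering_command`, `simulate`, the gain `kp`, the 30-degree steering limit, the speed and wheelbase) is a hypothetical choice for illustration.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def steering_command(target_heading, measured_heading,
                     kp=1.5, max_steer=math.radians(30)):
    """Proportional feedback: steer in proportion to the heading error.

    kp and max_steer are illustrative values, not figures from the course.
    """
    error = wrap_angle(target_heading - measured_heading)
    # Clamp to the (assumed) mechanical limit of the steering motor.
    return max(-max_steer, min(max_steer, kp * error))


def simulate(target_heading, initial_heading, steps=200, dt=0.05,
             speed=1.0, wheelbase=0.3, use_feedback=True):
    """Simulate the car's heading with a simple bicycle model.

    Each step stands in for one pass of the control loop: read the
    heading (the IMU's job on the real car), compute a steering angle,
    and let the car turn for dt seconds.
    """
    heading = initial_heading
    for _ in range(steps):
        if use_feedback:
            steer = steering_command(target_heading, heading)
        else:
            steer = 0.0  # open loop: wheels stay straight
        heading = wrap_angle(
            heading + speed / wheelbase * math.tan(steer) * dt
        )
    return heading


if __name__ == "__main__":
    # The car starts 1 radian off course, as if it had been picked up
    # and turned by hand.
    print("with feedback:   ", simulate(0.0, 1.0))
    print("without feedback:", simulate(0.0, 1.0, use_feedback=False))
```

In this sketch the closed-loop run steers the heading back toward the target, while the open-loop run never recovers from the disturbance. Tuning, in this simplified picture, means choosing `kp`: too low and the car corrects sluggishly, too high and it can overshoot.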

Next, on April 15 they’ll be demonstrating GPS-based waypoint navigation, and on April 22 they will use LIDAR to avoid obstacles. Finally, on April 29 teams will race from one point to another while avoiding obstacles to vie for the fastest time.

Extend National Robotics Week to all of April — get a dose of robotics to start your Friday mornings April 15, 22 and 29 and watch these demos in front of Old Main from 9–10 a.m.

Media Contact

Monique Clement, [email protected]

480-727-1958

Ira A. Fulton Schools of Engineering