Cloud computing performance, satisfaction guaranteed

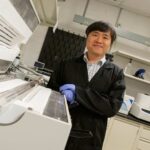

Ming Zhao thinks cloud computing should deliver computing power just as electric companies deliver electricity — like a utility. And Zhao believes, like other services we use on a daily basis, cloud computing should come with a performance guarantee.

The complexity of the cloud has historically made it difficult for cloud service providers such as Amazon Web Services to provide any sort of performance guarantee, says Zhao, an associate professor of computer science at Arizona State University’s Ira A. Fulton Schools of Engineering.

With his 2013 National Science Foundation CAREER Award project, “Coordinated QoS-Driven Management of Cloud Computing and Storage Resources,” Zhao set out to change that.

A perfect storm of computing complexity

Unlike peak electricity usage times during Arizona summers, peak times for cloud computing resources can be unpredictable. And unlike your home’s personal air conditioner, the hardware running the cloud is a shared infrastructure.

Consider Phoenix-area utilities preparing to power millions of air conditioning units on the hottest day of the year as the equivalent of an online retailer preparing for Cyber Monday.

Without cloud computing, that online retailer would need to purchase enough computer hardware to handle the highest-traffic weekend of the year, a prohibitive cost even for the largest companies in the market.

Instead, cloud computing service providers sell shared computing and storage resources to many companies. But with unpredictable traffic and resource needs among all their customers, cloud service providers can’t guarantee their customers the same level of performance as they could if each company had its own dedicated computing infrastructure to run its applications.

“Each application isn’t run by itself in the cloud,” Zhao explains. “It’s sharing and competing for resources with other applications. Interference is intrinsic among them. Applications can affect each other.”

In 2013, Zhao set out to understand what types of resources different cloud computing applications require — both computing and storage — and to predict the demand on these resources.

Zhao created new performance modeling techniques that quickly capture applications’ complex behaviors and accurately predict their resource demands. Fast modeling and prediction allow his solution to adapt to the dynamic changes in cloud applications and systems.

Then, once he had a good understanding of clients’ resource demands, he began optimizing the allocation of cloud resources to applications, a necessary step if cloud computing is to provide quality-of-service guarantees while remaining cost-effective.
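The predict-then-allocate idea can be illustrated with a toy sketch. This is not Zhao's system: the function names, the exponentially weighted moving average used as a predictor and the proportional scaling rule are all assumptions chosen for simplicity, and the application names and numbers are made up.

```python
from typing import Dict, List


def predict_demand(history: List[float], alpha: float = 0.5) -> float:
    """Estimate the next demand value with an exponentially weighted moving average.

    Hypothetical stand-in for a performance model; recent samples weigh more.
    """
    if not history:
        raise ValueError("history must contain at least one sample")
    estimate = history[0]
    for sample in history[1:]:
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate


def allocate(capacity: float, predicted: Dict[str, float]) -> Dict[str, float]:
    """Grant every application its predicted demand if the shared capacity allows.

    If total predicted demand exceeds capacity, scale all grants down by the
    same factor (an assumed policy, picked only because it is easy to follow).
    """
    total = sum(predicted.values())
    if total <= capacity:
        return dict(predicted)
    scale = capacity / total
    return {app: demand * scale for app, demand in predicted.items()}


# Illustrative data: two applications sharing 10 units of some resource.
history = {"web_store": [2.0, 4.0, 8.0], "analytics": [6.0, 6.0, 6.0]}
predicted = {app: predict_demand(samples) for app, samples in history.items()}
shares = allocate(capacity=10.0, predicted=predicted)
print(shares)
```

A real provider would need far richer models and would weigh each customer's service-level targets rather than shrinking every share equally, but the sketch shows why fast, accurate prediction matters: the allocation is only as good as the demand estimates fed into it.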

A silver lining to the future of cloud computing guarantees

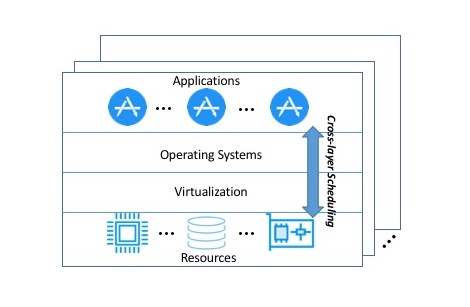

Cloud computing achieves its scalability and cost-effectiveness through virtualization. Virtualization creates multiple digital instances of a particular physical resource such as a server in order to better share it among all the competing applications running in the cloud. But virtualization also makes it harder for cloud computing to provide performance guarantees because it creates a barrier between the cloud applications and the cloud resource managers.

Zhao’s research team developed a cloud computing management system that helps better coordinate the use of resources to reliably deliver performance.

Through a method called cross-layer scheduling, Zhao’s management system allows the cloud’s digital task managers, called schedulers, to break through the boundaries of virtualization and time tasks more effectively across the layers of the cloud computing architecture.

Horizontally, the cloud computing architecture consists of computing, storage and networking resources, each with a scheduler that manages tasks for that resource. Vertically, it consists of hardware, system software and customer applications. Graphic courtesy of Ming Zhao

Cross-layer scheduling allows for better understanding of applications’ resource needs and better enforcement of resource allocation decisions, thus making it easier to deliver the right amount of resources at the right time for performing any given work in the cloud.

“We have demonstrated it’s possible to provide strong quality of service guarantees to real-world cloud computing applications,” Zhao says. “Our research outcomes are practically useful and we have seen the adoption of our technologies by real-world cloud computing providers.”

Zhao’s team developed the techniques with the help of industry partners. The techniques are open source and available for cloud computing providers to implement themselves.

Clouds — atmospheric or digital — don’t stay still for long

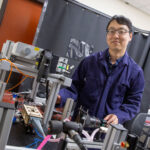

Cloud computing is a young field that’s constantly evolving. While the emphasis for the past decade has been on the far-off cloud (data centers scattered throughout the world), “today cloud computing is expanding its scope and expanding its reach to the edge,” Zhao says. In other words, to our smartphones and other internet-connected devices.

“[Cloud computing involves] hundreds of millions of computers in many data center buildings globally,” Zhao says. “Now that we are reaching the edge, we have billions of devices in the picture.”

Though it adds another dimension of complexity to Zhao’s research, he’s eager to take on the challenge and see what this new aspect can do for cloud computing performance and capabilities.

“As a cloud computing researcher, I find [edge computing] very interesting. When computing is distributed between the cloud and the edge, the resource management problem now needs to be solved across the cloud and the edge, not just resources in the servers in the cloud anymore.”

Incorporating edge devices into his understanding of cloud computing resource allocation and optimization will be the next phase of his NSF CAREER Award project.

As more devices rely on cloud computing to support applications, Zhao’s cloud computing solutions may become just as crucial to users’ digital lives as utilities are to their daily lives.

Preparing the next generation of cloud computing professionals

Advancing curriculum is another important aspect of the National Science Foundation’s CAREER Award program. Zhao believes he has succeeded in his goal of enhancing cloud computing education through his research.

Back in 2013, when he received the NSF CAREER Award, computer science curricula included little cloud computing beyond a handful of graduate-level, seminar-style courses in which students would get together and discuss research papers.

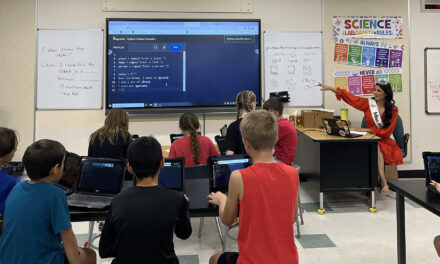

As part of this project, Zhao wanted to offer systematic training in cloud computing to students at different levels, including outreach to younger students, undergraduate and graduate cloud computing courses, and more advanced special-topics courses in distributed computing for high-level students.

“It’s such an important field, it’s still rapidly growing and it’s only going to become more important,” Zhao says. “It’s not going to be replaced with edge computing, it always has to be there to support the edge.”

Zhao emphasizes the importance of using real-world industry examples in his courses to build on the fundamentals typically taught in academia.

“I still want to teach the fundamentals — what enable cloud computing, to understand the nuts and bolts of cloud computing — so students will not only be users of cloud computing, but contributors to the next generation of cloud computing,” Zhao says.

Zhao uses materials developed in his research to teach fundamentals and works with cloud computing companies to provide interesting projects for his students. He also organizes cloud computing hackathons in which students compete by proposing and implementing their own ideas, with mentoring from cloud computing engineers at companies such as IBM.

He has trained multiple graduate and doctoral students, almost all of whom now work in the cloud computing industry, including at Amazon, cloud computing startups and other companies. He has also mentored about 60 undergraduate students in cloud computing research.

A large number of Zhao’s students are from underrepresented groups, including many female and Latino students. He makes a great effort to include students with diverse backgrounds in his CAREER Award project, as he strongly believes training a diverse workforce is important to continuing advancements in the cloud computing field.

To introduce K-12 students to cloud computing, he held workshops in which students took apart devices like laptops, tablets and smartphones to see what they look like inside, and learned how to run computer games like Minecraft on the Amazon Web Services cloud by hosting their own virtual game servers.