A slam dunk for machine learning

It picks up the ball, carefully lines up its shot and, in one fluid movement, releases its projectile. The robot’s arms remain in the air expectantly as the ball bounces off the rim and drops out of sight. You can almost sense the robot’s dejection as its lifeless, Kinect-powered eyes stare blankly at its missed target.

This, of course, is humanity’s knack for personification taking over. The robot isn’t upset when it misses, nor is it elated when it finally sinks a basket. However, it is doing something humans do.

The robot is learning.

Though machine learning — a type of artificial intelligence that enables a computer to learn without explicit programming — isn’t new, the speed at which this robot learns breaks new ground in robotics. While many robots can take anywhere from two to three days to learn how to execute a given task, one Arizona State University engineer has created an algorithm that allowed his basketball-throwing robot to learn how to sink a shot in a matter of hours.

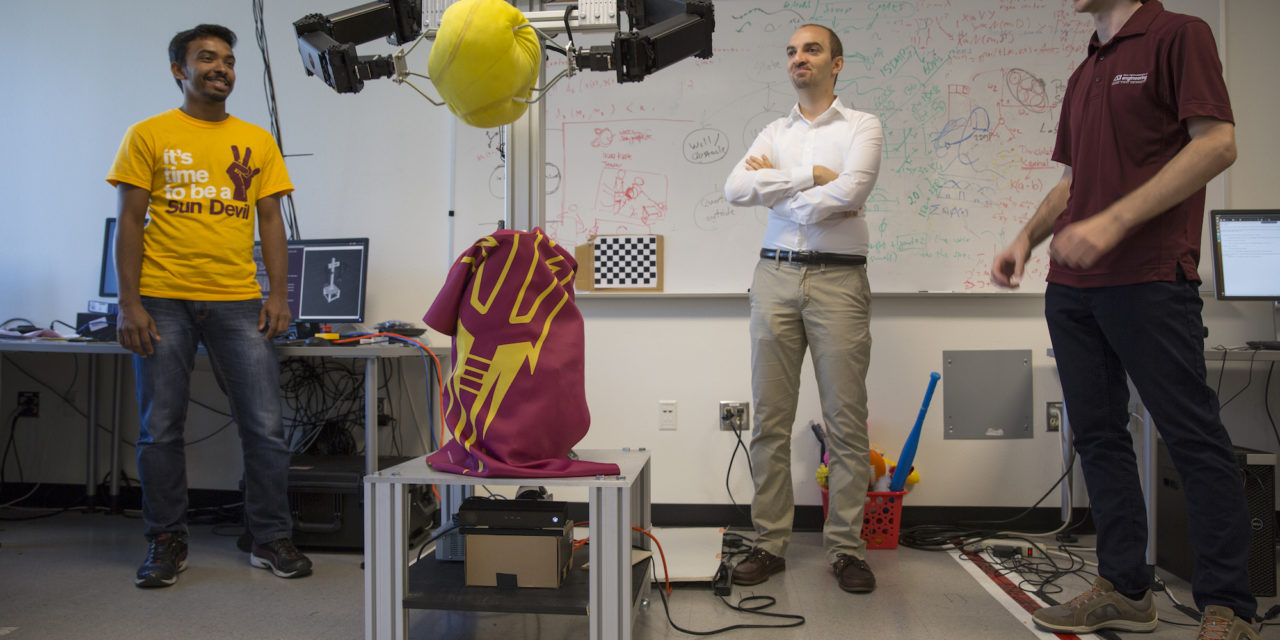

Advancing machine learning

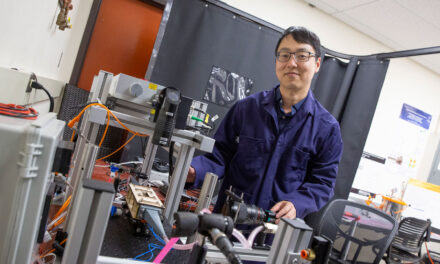

Heni Ben Amor (left), an assistant professor of computer science, and Kevin Sebastian Luck, a computer science doctoral student, watch as their robot tosses a ball. Accurately throwing a ball is a simple task for a human, but it can take most robots up to three days to master. A new algorithm developed by Ben Amor has reduced that learning time to a matter of hours. Photographer: Jessica Hochreiter/ASU

Heni Ben Amor, an assistant professor of computer science at ASU’s Ira A. Fulton Schools of Engineering, created the algorithm to address several drawbacks of current machine learning approaches.

For instance, a robot can learn a task through imitation learning, that is, by watching a human perform it.

“This approach has limitations, however,” says Ben Amor, who heads the ASU Interactive Robotics Laboratory. “Humans and robots have different configurations, for starters.”

A human arm does not work the same way as a robot’s, so imitation can only take a robot so far. Human bodies work synergistically, with muscles acting in concert, while an autonomous agent’s limbs bend and twist in isolation. Robots often have a different number of joints and points of articulation, and there is certainly no motor whirring away to power a human’s movements.

“There is also reinforcement learning, or trial and error,” says Ben Amor. “This, too, has its limitations, because it can take thousands, perhaps millions, of trials for a robot to learn exactly what it needs to do to accomplish a task.”

Ben Amor’s algorithm, called “sparse latent space policy search,” first enables a robot to learn how its different joints, parts and movements are coordinated. Using that knowledge, the robot gradually eliminates unsuccessful solutions until it arrives at a successful one.

The algorithm is technically a form of reinforcement learning, albeit at a much faster pace.
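To make the idea of searching through a small space of joint coordination more concrete, here is a minimal Python sketch. It is not Ben Amor’s sparse latent space policy search. It uses a generic cross-entropy method, a fixed, hypothetical coordination matrix `W` that maps two latent “synergy” values onto seven joint parameters, and a made-up `toy_reward` standing in for how good a throw is. In the actual algorithm the robot learns that coordination itself; here it is simply assumed to be known.

```python
import numpy as np


def toy_reward(theta, theta_target):
    """Hypothetical stand-in for a throw's outcome: the closer the joint
    parameters are to a (made-up) successful setting, the higher the reward."""
    return -float(np.sum((theta - theta_target) ** 2))


def latent_policy_search(W, reward_fn, n_iters=50, pop=30, n_elite=6, seed=0):
    """Cross-entropy search over a low-dimensional latent space.

    W has shape (n_joints, n_latent) and maps latent "synergy" values to
    joint-level parameters, so each sample moves many joints together.
    """
    rng = np.random.default_rng(seed)
    n_latent = W.shape[1]
    mean = np.zeros(n_latent)
    std = np.ones(n_latent)
    for _ in range(n_iters):
        # Try a batch of candidate latent settings around the current guess.
        Z = mean + std * rng.standard_normal((pop, n_latent))
        thetas = Z @ W.T  # shape (pop, n_joints)
        rewards = np.array([reward_fn(t) for t in thetas])
        # Discard unsuccessful candidates and refit to the best few.
        elite = Z[np.argsort(rewards)[-n_elite:]]
        mean = elite.mean(axis=0)
        std = elite.std(axis=0) + 1e-3  # small floor keeps some exploration
    return W @ mean  # joint-level parameters of the final policy


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    W = rng.standard_normal((7, 2))  # 7 joint parameters, 2 latent synergies
    target = W @ np.array([0.8, -0.5])  # made-up "successful throw"
    best = latent_policy_search(W, lambda t: toy_reward(t, target))
    print("reward after search:", toy_reward(best, target))
```

Searching over two latent values instead of seven independent joint settings shrinks the space of candidates the robot has to try. That reduction is the intuition behind learning joint coordination before refining the throw.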

“In a sense, this algorithm is linked to how humans learn. This project is not making a biological statement; it simply mirrors how we approach a problem,” says Ben Amor. “We innately understand the relationship between our different joints and synergistic movements, but this is something robots need to learn.”

Having a ball

Sinking a free throw might not seem like a particularly valuable skill for a robot to master, but Ben Amor chose the task to address another set of issues in machine learning and robot coordination.

“Many robot coordination challenges simply employ a single arm, such as playing table tennis or ball-in-a-cup,” says Ben Amor. In pursuit of robots capable of more sophisticated actions, he chose throwing a basketball as a target task that requires coordinating two arms.

Throwing a basketball also tackles the issue of dynamic movement. Robots often break a task down into a series of discrete movements: pick up an object, rotate it, move it five feet to the right, lower it toward a receptacle and release it.

Throwing a basketball cannot be accomplished in starts and stops — it requires a burst of dynamic motion, an elusive feat for many learning robots.

“It requires a robot to dynamically apply force at the right time, straying away from the ‘divide and conquer’ approach common in computer science and machine learning,” says Ben Amor.

Programming versus learning

Yash Rathore, a computer science graduate student, pores over the code that enables Heni Ben Amor’s robot to learn so quickly. Ben Amor believes his algorithm has the potential to improve industrial processes. Photographer: Jessica Hochreiter/ASU

Some might wonder: why teach a robot anything? Why don’t we just program them to perform desired actions?

In short, programming every individual movement by hand, while accounting for every situation and variable, is inefficient.

“For example, if you want to change the weight of the ball or the distance to the hoop, a programmer would have to first evaluate the physics, kinematics and mathematics of the change, and then program the robot accordingly,” says Ben Amor.
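As a rough illustration of the kind of physics a programmer would have to redo, the Python sketch below computes the release speed an idealized ball needs to reach the hoop from a given distance and launch angle. The function name, the numbers and the idealization (no air drag, no spin, the ball treated as a point) are all assumptions for illustration; it says nothing about how Ben Amor’s robot is actually controlled. It follows from the projectile trajectory y = x·tan θ − g·x² / (2·v²·cos² θ), solved for v at x = distance and y = rise.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def release_speed(distance_m, rise_m, angle_deg):
    """Launch speed (m/s) for an ideal projectile (no drag, no spin) thrown at
    angle_deg to pass through a point distance_m away and rise_m higher."""
    theta = math.radians(angle_deg)
    denom = 2 * math.cos(theta) ** 2 * (distance_m * math.tan(theta) - rise_m)
    if denom <= 0:
        # The straight line at this launch angle never rises above the target.
        raise ValueError("target unreachable at this launch angle")
    return math.sqrt(G * distance_m ** 2 / denom)


# Hypothetical setups: raising the hoop by 0.3 m changes the required speed,
# and a hand-coded controller would need its joint motions recomputed.
print(release_speed(2.0, 0.5, 45))
print(release_speed(2.0, 0.8, 45))
```

Notably, the ball’s weight does not appear in this idealized formula at all; a heavier ball matters because the arms must deliver more force to reach the same speed, which is yet another calculation a programmer would have to work out by hand.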

In comparison, Ben Amor can change the ball’s weight or the hoop’s height, and the robot can make all of those adjustments on its own in three hours or less, with no reprogramming necessary.

“Michael Jordan wasn’t born a legend, although the ability to become a superstar was part of his innate self,” notes Ben Amor. “A key ingredient for his success was training, and if he were to find himself using a heavier basketball or shooting to a higher hoop, he would need to relearn — especially because he’d have to overcome muscle memory.”

Likewise, Ben Amor’s robot is able to make similar adjustments through learning.

In an industrial setting, this ability can be invaluable. Rather than requiring a team to reprogram the robot completely every time the product on an assembly line changes, a process that can take days or more, the robot can adjust itself with just a few hours of learning.

This rapid learning method could also help integrate advanced technologies into healthcare.

“Often you see robotics and other cutting-edge technologies not get adopted in the medical industry because of the time investment required for setup and maintenance,” says Ben Amor. “A robot that requires a lot of time to learn an action is no exception, but lowering the time needed to do so could lead to more widespread use.”

Ultimately, Ben Amor wants to create learning robots that can quickly adapt to complement their human partners.

“Robots and humans excel in different areas. I want to create symbiotic human-robot interactions, combining the best capabilities of robots with those of humans,” says Ben Amor. “Robots are fast, strong and efficient in what they do, and humans have high-level cognitive abilities. The goal is to bring them together and create a new capability that wasn’t there before.”

Terry Grant contributed additional reporting to this article.

Media Contact

Pete Zrioka, [email protected]

480-727-5618

Ira A. Fulton Schools of Engineering